Data quality is a critical factor in any organization that relies on data to make decisions, yet finding a compelling use case to justify investment in data quality improvement projects can be challenging. While studies show that the average company loses $15 million annually due to poor data quality, it’s difficult to convince business sponsors to allocate resources based on industry averages alone.

To secure buy-in for data quality initiatives, it’s essential to move beyond general statistics and identify specific use cases within your organization that demonstrate the tangible impact of poor data quality. This involves pinpointing areas where data quality issues are hindering operations, affecting business users, and ultimately impacting the bottom line. By showcasing concrete examples of how data quality improvements can address these challenges, you can build a strong case for investment and drive meaningful change within your organization.

The Process Overview

Finding a compelling use case for data quality isn’t as difficult as it might seem. It primarily requires engaging the right stakeholders and following a straightforward process. In this article, we present a seven-step approach to help you identify and articulate a strong data quality use case within your organization.

- Identify Key Data Domains and Assets: Begin by pinpointing the most crucial data areas for your business and the valuable data they contain. Understand how this data is used to make key business decisions and which datasets are considered the most important.

- Gather Insights from Users: Talk to the people who use the data every day. Engage with business users, data owners, and analysts to understand the challenges they face due to data quality issues. This will help you identify specific examples of how poor data quality impacts their work.

- Prioritize Data Quality Assessment: Based on your discussions with users, select a few essential datasets with known or potential data quality issues. Focus on the datasets that have the biggest impact on business operations and where improvements will yield the greatest benefits.

- Profile Data Assets: Analyze the data to uncover common quality issues and validate the concerns raised by users. This will give you a clear picture of the types and severity of data quality problems within your chosen datasets.

- Calculate the Cost of Poor Data Quality: Go beyond identifying the issues and quantify their impact. Determine how data quality problems affect your business operations in terms of financial losses, missed opportunities, or decreased efficiency.

- Estimate the Investment in Data Quality: Outline the resources required to address the data quality issues you’ve identified. This includes the time commitment of data stewards, data engineers, data owners, and the cost of any necessary data quality tools.

- Build a Compelling Case for Data Quality: Bring it all together by comparing the cost of poor data quality with the investment needed for improvement. Develop a clear and concise justification for investing in data quality, highlighting the potential return on investment and the benefits to the organization.

Identify Key Data Domains and Assets

To build a strong case for data quality, start by identifying a data domain that plays a crucial role in making your business data-driven. This could be an area heavily reliant on accurate and timely data for decision-making, such as:

- Production Planning: Where precise data on inventory, demand, and production capacity is essential to optimize manufacturing processes.

- Marketing Campaign Optimization: Where accurate data on campaign performance, customer behavior, and market trends informs budget allocation and strategy.

- Customer Churn Prevention: Where timely data on customer engagement, satisfaction, and feedback helps identify at-risk customers and proactively address their needs.

This process requires a solid understanding of your organization’s data landscape. You’ll need access to data sources and a basic understanding of the data model and how data flows through various business processes. Pay close attention to the data lifecycle, as each stage can impact data quality:

- Data Collection: How is data gathered? What are the sources? Are there potential issues with data entry or automated collection methods?

- Data Storage: Where is the data stored? Are there multiple copies or versions of the data? How are data security and access managed?

- Data Processing: How is data cleaned, transformed, and prepared for analysis or use in AI algorithms? Are there potential points of failure or inconsistencies in the data pipeline?

Once you understand the purpose and flow of data within a specific domain, identify the most critical datasets that drive key business decisions. These datasets will become the focus of your data quality assessment. Finally, identify the data owners and stakeholders associated with these assets, as they will be crucial in your next step.

Gather Insights from Users

While understanding the data landscape is crucial, it’s equally important to gather insights from the people who use the data every day. Business users who rely on data for decision-making often have firsthand experience with the consequences of poor data quality. They can provide valuable examples of how inaccurate, incomplete, or outdated data has impacted their work and hindered their ability to achieve business objectives.

However, not all business users may possess the technical expertise to articulate data quality issues in detail. In such cases, engage with data stewards, data analysts, or application owners who have a deeper understanding of the data and its limitations. These individuals can offer more technical insights into the specific types of data quality problems that exist and their potential root causes.

When interviewing these stakeholders, encourage them to share specific instances where they encountered data quality issues. Ask questions like:

- “Can you recall a time when you had to deal with a data quality problem that affected your work?”

- “Have you ever been unable to complete a task or make a decision because of missing, outdated, or incorrect data?”

- “What specific challenges have you faced due to data quality issues?”

These data stakeholders are your best source of information about past and present data quality challenges. Their involvement is crucial not only for identifying use cases but also for securing buy-in for your data quality initiatives. These stakeholders often have influence over budget allocation and project approval, so having them on your side from the beginning increases the likelihood of project success. Furthermore, they can help prioritize your efforts by highlighting the most critical datasets that need immediate attention.

Prioritize Data Quality Assessment

After interviewing data stakeholders and understanding their pain points, you’ll have a clearer picture of your organization’s data quality landscape. You’ll know which data domains and assets are perceived as less reliable and which ones have the greatest impact on business processes. These insights are crucial for prioritizing your data quality efforts.

Remember, initial data quality initiatives should focus on quick wins. Target data domains and assets that demonstrate both low reliability and high business impact. Addressing these areas first will deliver the most significant improvements and build momentum for future data quality projects.
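One way to make this prioritization explicit is to score each asset on the two criteria above. The following is a minimal sketch, not a prescribed method: the 1-to-5 scales, the scoring formula, and the asset names are all assumptions made for illustration, and the scores would come from your stakeholder interviews.

```python
from dataclasses import dataclass


@dataclass
class DataAsset:
    name: str
    reliability: int      # 1 (unreliable) to 5 (trusted), as perceived by users
    business_impact: int  # 1 (minor) to 5 (critical), as perceived by users


def priority_score(asset: DataAsset) -> int:
    """Higher score means lower reliability combined with higher business impact."""
    return (6 - asset.reliability) * asset.business_impact


def rank_assets(assets: list[DataAsset]) -> list[DataAsset]:
    """Order assets from the strongest quick-win candidate to the weakest."""
    return sorted(assets, key=priority_score, reverse=True)


# Hypothetical scores collected during stakeholder interviews.
assets = [
    DataAsset("crm.customers", reliability=2, business_impact=5),
    DataAsset("erp.invoices", reliability=4, business_impact=5),
    DataAsset("web.clickstream", reliability=2, business_impact=2),
]

for asset in rank_assets(assets):
    print(f"{asset.name}: {priority_score(asset)}")
```

Multiplying the two factors keeps an asset near the top only when it is both unreliable and important, which matches the quick-win criterion; a weighted sum would be an equally reasonable choice.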

Your interviews will also reveal how data quality issues have affected business users and provide valuable insights into their expectations for data quality. Understanding their definition of “good” data is essential for aligning your efforts with business needs.

Based on this information, select a few data domains and their associated assets for further validation. These are the datasets where you’ll conduct in-depth profiling to confirm the suspected data quality issues and gather detailed evidence for your use case.

Profile Data Assets

Data profiling is the process of examining the data within your data assets, such as tables, files, or databases, to understand its structure, content, and overall quality. It involves collecting statistics, identifying patterns, and assessing data characteristics to uncover potential issues and gain a comprehensive view of your data.

A thorough data profiling process, often called a data quality assessment, goes beyond simply reviewing sample values. It includes rigorous testing with data quality checks, which are validation rules that determine if the data meets predefined quality standards. For example, a data quality check might verify that a “business_phone” column contains valid phone numbers in the correct format and that this information is present for all records.

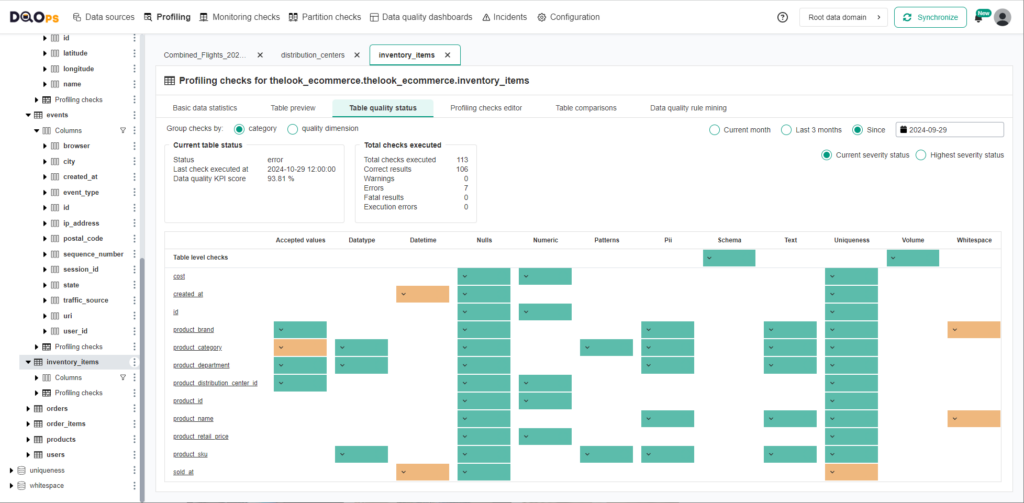

Data profiling often involves using specialized tools that can connect to various data sources and automate the analysis. These tools offer a wide range of built-in data quality checks to identify common data problems. Focus your data profiling efforts on the most critical datasets identified in the previous steps.

Leverage the insights gained from user interviews to configure data quality checks that validate their concerns. For instance, if users reported that 20% of customer phone numbers are in the wrong format, you can create a data quality check to verify this observation and provide concrete evidence of the issue. This approach ensures that your data profiling is aligned with real-world business needs and addresses the specific challenges faced by users.
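To illustrate what such a check computes, here is a minimal sketch in plain Python. It is not how any particular tool implements the check: the phone number rule (an optional "+" followed by 7 to 15 digits after separators are removed) and the sample values are assumptions chosen for the example.

```python
import re

# Assumed format rule: optional "+", then 7 to 15 digits once separators are removed.
PHONE_PATTERN = re.compile(r"\+?\d{7,15}")
SEPARATORS = re.compile(r"[\s\-().]")


def profile_phone_column(values):
    """Return the percentage of missing and of wrongly formatted phone numbers."""
    total = len(values)
    if total == 0:
        return {"missing_percent": 0.0, "invalid_format_percent": 0.0}
    missing = 0
    invalid = 0
    for value in values:
        if value is None or not value.strip():
            missing += 1  # completeness problem: no value at all
            continue
        normalized = SEPARATORS.sub("", value)
        if not PHONE_PATTERN.fullmatch(normalized):
            invalid += 1  # format problem: value present but malformed
    return {
        "missing_percent": 100.0 * missing / total,
        "invalid_format_percent": 100.0 * invalid / total,
    }


# Hypothetical sample of a "business_phone" column.
sample = ["+1 (555) 010-9999", "555-0100", "n/a", None, "0048 22 555 01 00"]
print(profile_phone_column(sample))
```

Reporting missing and malformed values separately is deliberate: the two problems usually have different root causes and different owners, and the percentages can be compared directly with the figures users reported.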

You can use a data quality tool such as DQOps, whose data profiling component uses AI to configure the most common data quality checks and detect common data quality issues. The data profiler generates a report that you can share with the data stakeholders.

Calculate the Cost of Poor Data Quality

Once you’ve identified and confirmed data quality issues through profiling, the next step is to estimate their impact on the business. Data quality issues are typically categorized into various dimensions, each with its own potential consequences. Analyzing the impact within each dimension can help you quantify the cost of poor data quality.

Here are some examples of how different data quality dimensions can affect your business:

- Timeliness: Outdated or delayed data can lead to missed opportunities and inaccurate decision-making. For instance, if crucial data isn’t available on time for budget forecasting, it can result in misallocation of resources and missed targets.

- Accuracy: Inaccurate data can have a direct impact on operational efficiency and customer satisfaction. Incorrect phone numbers, for example, can prevent companies from communicating effectively with customers, leading to lost sales and damaged relationships.

- Consistency: Inconsistencies in data across different systems can create confusion and erode trust in data integrity. If the total revenue reported in the data warehouse differs from the revenue recorded in the ERP system, it can lead to financial discrepancies and reporting errors.

- Validity: Invalid records can cause unnecessary work for employees and even lead to regulatory issues if the data is used for compliance purposes. For example, customer records that fail validation rules can complicate KYC (Know Your Customer) compliance efforts in financial services.
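Some of these dimensions translate directly into simple checks. The sketch below shows a hypothetical consistency check (comparing a revenue total from the data warehouse with the ERP total) and a hypothetical timeliness check (comparing the last load time with a maximum acceptable delay). The function names, the 0.5% tolerance, and the 24-hour threshold are assumptions for illustration only.

```python
from datetime import datetime, timedelta


def is_consistent(warehouse_total, erp_total, tolerance=0.005):
    """True when the two totals differ by no more than the relative tolerance."""
    if erp_total == 0:
        return warehouse_total == 0
    return abs(warehouse_total - erp_total) / abs(erp_total) <= tolerance


def is_fresh(last_loaded_at, now, max_delay=timedelta(hours=24)):
    """True when the most recent load happened within the allowed delay."""
    return now - last_loaded_at <= max_delay


# Hypothetical values for illustration.
print(is_consistent(1_002_000, 1_000_000))                        # 0.2% difference
print(is_fresh(datetime(2024, 1, 1, 6), datetime(2024, 1, 2, 5)))  # 23 hours old
```

The thresholds are where business expectations enter: the acceptable revenue difference and the acceptable delay should come from the users who depend on the data.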

By examining the specific data quality issues you’ve uncovered and considering their impact within these dimensions, you can start to quantify the cost of poor data quality. For example:

- If 1% of records used for forecasting are invalid, it could lead to a 1% deviation in the projected budget. If the annual budget for that business domain is $100 million, the potential cost of this data quality issue is $1 million per year.

- If data delays cause a company to miss out on a key sales opportunity worth $50,000 each quarter, the annual cost of poor data timeliness would be $200,000.

By quantifying the financial impact of data quality issues, you can build a stronger case for investment in data quality improvement initiatives.
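The arithmetic behind the two examples above can be written down as a small sketch. The function names are hypothetical, and the first function encodes the simplifying assumption used in the example: the budget deviates in direct proportion to the share of invalid records.

```python
def forecast_deviation_cost(annual_budget, invalid_record_rate):
    """Assumes the budget deviation is proportional to the share of invalid records."""
    return annual_budget * invalid_record_rate


def missed_opportunity_cost(cost_per_period, periods_per_year):
    """Annualize a loss that recurs every period (for example, every quarter)."""
    return cost_per_period * periods_per_year


# Figures taken from the two examples in the text.
validity_cost = forecast_deviation_cost(annual_budget=100_000_000, invalid_record_rate=0.01)
timeliness_cost = missed_opportunity_cost(cost_per_period=50_000, periods_per_year=4)

print(f"Validity:   ${validity_cost:,.0f} per year")
print(f"Timeliness: ${timeliness_cost:,.0f} per year")
print(f"Total:      ${validity_cost + timeliness_cost:,.0f} per year")
```

Keeping each assumption in its own function makes it easy for stakeholders to challenge a single input, such as the invalid record rate, without redoing the whole estimate.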

Estimate the Investment in Data Quality

Now that you understand the potential gains from addressing data quality issues, it’s time to estimate the investment required to achieve those improvements. This involves identifying the necessary resources, outlining the project timeline, and defining the activities involved in fixing the most critical data quality issues.

The most crucial resource in any data quality project is people. Estimate the time commitment needed from both business users, who possess domain expertise, and engineers, who will implement the technical solutions. Based on your previous analysis, you should already know where the data quality issues originate. This will help you determine who needs to be involved:

- Business Application Issues: If the problems stem from a business application, you’ll need to engage software developers to implement additional validation rules or improve data entry processes.

- Data Transformation Errors: If the data is corrupted during transformation, you’ll need data engineers to apply data cleansing logic, correct errors, and reload the affected data into the data lake or warehouse.

In addition to personnel costs, consider the cost of tools. Data quality platforms can automate many aspects of data quality management, from profiling and monitoring to remediation. While commercial platforms can be expensive, other options like DQOps offer a cost-effective alternative.

Finally, factor in the cost of infrastructure. If you choose an on-premises deployment for your data quality platform, you’ll need to account for the hardware and maintenance costs associated with hosting the solution.

By carefully estimating the investment required for your data quality project, you can demonstrate the feasibility of your proposed solution and its potential return on investment.
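A simple way to structure this estimate is to add up the people, tool, and infrastructure costs described above. The sketch below is only an illustration: the roles, day counts, daily rates, and tool and infrastructure figures are hypothetical placeholders, not benchmarks.

```python
from dataclasses import dataclass


@dataclass
class RoleEffort:
    role: str
    days: float        # estimated working days on the project
    daily_rate: float  # internal or external cost per day


def estimate_investment(efforts, tool_cost=0.0, infrastructure_cost=0.0):
    """Total project cost: people cost plus tools plus infrastructure."""
    people_cost = sum(effort.days * effort.daily_rate for effort in efforts)
    return people_cost + tool_cost + infrastructure_cost


# Hypothetical figures for a small-scale project.
efforts = [
    RoleEffort("data steward", days=20, daily_rate=500),
    RoleEffort("data engineer", days=30, daily_rate=700),
    RoleEffort("data owner", days=5, daily_rate=800),
]
total = estimate_investment(efforts, tool_cost=10_000, infrastructure_cost=5_000)
print(f"Estimated investment: ${total:,.0f}")
```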

Build a Compelling Case for Data Quality

With a clear understanding of both the costs and potential benefits of addressing data quality issues, you’re ready to build a compelling use case for your data quality initiative.

Start by focusing on the data domains and assets that generate the highest losses due to poor data quality. Compare these losses with the estimated cost of running a data quality improvement project focused on these critical areas. A strong business case should aim to address problems where the potential gains significantly outweigh the investment. Ideally, target issues where the losses are at least five times greater than the cost of remediation. This provides a buffer in case implementation costs run higher than expected or the project doesn’t achieve all of its initial goals.

By targeting a crucial business process hindered by data quality issues, the cost of a small-scale data quality improvement project will likely be a fraction of the potential benefits.
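The comparison can be reduced to a single ratio. The sketch below applies the five-to-one guideline described above; the function names and the example project cost are hypothetical, while the annual loss reuses the $1 million and $200,000 figures from the earlier cost examples.

```python
def loss_to_cost_ratio(annual_loss, project_cost):
    """How many times the annual loss exceeds the cost of remediation."""
    if project_cost <= 0:
        raise ValueError("project_cost must be positive")
    return annual_loss / project_cost


def is_strong_case(annual_loss, project_cost, min_ratio=5.0):
    """Apply the guideline that losses should be at least min_ratio times the cost."""
    return loss_to_cost_ratio(annual_loss, project_cost) >= min_ratio


annual_loss = 1_000_000 + 200_000  # validity and timeliness examples from the text
project_cost = 150_000             # hypothetical remediation cost

print(f"Ratio: {loss_to_cost_ratio(annual_loss, project_cost):.1f}")
print(f"Strong case: {is_strong_case(annual_loss, project_cost)}")
```

If the ratio falls below the threshold, the usual options are to narrow the project scope to the costliest issues or to look for additional losses that the first estimate missed.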

To effectively communicate your use case, create a persuasive presentation that includes the following elements:

- Business Domain Overview: Clearly describe the business domain affected by the data quality issues.

- Business Process Impact: Provide a step-by-step explanation of how the identified data quality problems negatively impact the business process.

- Data Quality Issue Description: Detail the specific data quality issue, its scope, and the business processes it affects.

- Financial Impact: Present a clear and quantifiable estimate of the financial losses caused by the data quality issue, supported by data and calculations.

- User Testimonials: Include testimonials from users who can attest to the impact of the data quality problem on their work. Quantify the extra time and effort spent dealing with the consequences of poor data quality.

- Project Cost and Timeline: Outline the estimated cost of implementing data quality improvements and provide a realistic timeline for achieving those improvements.

- Expected Future State: Describe the desired outcome after the project is completed, emphasizing how the identified problems will be avoided in the future.

- Resource Allocation: Document the estimated costs by outlining the required resources and their anticipated level of engagement in the project.

A use case that incorporates these elements has a high chance of success. Before presenting your case, review it with the data stakeholders you interviewed earlier in the process. This ensures alignment and gathers valuable feedback, further strengthening your proposal.

What is the DQOps Data Quality Operations Center

DQOps is a data observability platform designed to monitor data and assess the data quality trust score with data quality KPIs. DQOps provides extensive support for configuring data quality checks, applying configuration by data quality policies, detecting anomalies, and managing the data quality incident workflow.

DQOps combines the functionality of a data quality platform, used to perform data quality assessments of data assets, with that of a complete data observability platform that monitors data and measures data quality metrics at the table level, expressing each table’s health score as data quality KPIs.

You can set up DQOps locally or in your on-premises environment to learn how it monitors data sources and ensures data quality within a data platform. To try it, follow the getting started guide in the DQOps documentation, which explains how to set up DQOps locally.

You may also be interested in our free eBook, “A step-by-step guide to improve data quality.” The eBook documents our proven process for managing data quality issues and ensuring a high level of data quality over time. This is a great resource to learn about data quality.