Last updated: November 13, 2024

How to detect texts exceeding a maximum length using a data quality check

This sample shows how to use data quality checks to detect texts that exceed a maximum length and how to view the results on data quality dashboards.

Overview

This example shows how to verify that the length of the text in a column does not exceed the maximum length.

PROBLEM

America’s Health Rankings provides an analysis of national health on a state-by-state basis by evaluating a historical and comprehensive set of health, environmental and socioeconomic data to determine national health benchmarks and state rankings.

The platform analyzes more than 340 measures of behaviors, social and economic factors, physical environment and clinical care data. Data is based on public-use data sets, such as the U.S. Census and the Centers for Disease Control and Prevention’s Behavioral Risk Factor Surveillance System (BRFSS), the world’s largest, annual population-based telephone survey of over 400,000 people.

The measure_name column contains the names of the measures. We want to verify that the length of the string values in this column does not exceed 30 characters.

SOLUTION

We will verify the data in bigquery-public-data.america_health_rankings.ahr using the monitoring text_max_length column check.

Our goal is to verify that the length of the strings in the measure_name column does not exceed the set range.

In this example, we will set one threshold level for the check:

- error: range from 0 to 30

If you want to learn more about checks and threshold levels, please refer to the DQOps concept section.

VALUE

If the maximum string length falls outside the range 0 - 30, an error alert will be triggered.
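
The logic behind this check can be sketched in a few lines of Python: measure the longest text in the column, then compare it with the range. This is only an illustration of the idea, not how DQOps implements it. The function names are hypothetical, and treating a column with no text values as valid is an assumption.

```python
from typing import Iterable, Optional


def max_text_length(values: Iterable[Optional[str]]) -> Optional[int]:
    """Return the length of the longest non-null string, or None if there are none."""
    lengths = [len(v) for v in values if v is not None]
    return max(lengths) if lengths else None


def evaluate_range_rule(actual: Optional[int], low: int = 0, high: int = 30) -> str:
    """Return "valid" when actual lies inside [low, high], otherwise "error"."""
    if actual is None:
        # Assumption: with nothing to measure there is nothing to flag.
        return "valid"
    return "valid" if low <= actual <= high else "error"


# "Able-Bodied" comes from the sample table; the 31-character string is made up.
sample = ["Able-Bodied", None, "x" * 31]
longest = max_text_length(sample)
severity = evaluate_range_rule(longest)
```

Here `longest` is 31 and `severity` is "error", because 31 lies outside the range 0 - 30.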

Data structure

The following is a fragment of the bigquery-public-data.america_health_rankings.ahr dataset. Some columns were omitted for clarity.

The measure_name column of interest contains values whose length should not exceed the configured threshold.

| edition | report_type | measure_name | state_name | subpopulation |
|---|---|---|---|---|
| 2021 | 2021 Health Disparities | Able-Bodied | California | |
| 2021 | 2021 Health Disparities | Able-Bodied | Colorado | |
| 2021 | 2021 Health Disparities | Able-Bodied | Hawaii | |
| 2021 | 2021 Health Disparities | Able-Bodied | Kentucky | |
| 2021 | 2021 Health Disparities | Able-Bodied | Maryland | |
| 2021 | 2021 Health Disparities | Able-Bodied | New Jersey | |
| 2021 | 2021 Health Disparities | Able-Bodied | Utah | |
| 2021 | 2021 Health Disparities | Able-Bodied | West Virginia | |
| 2021 | 2021 Health Disparities | Able-Bodied | Arkansas | Female |

Run the example using the user interface

A detailed explanation of how to start the DQOps platform and run the example is available here.

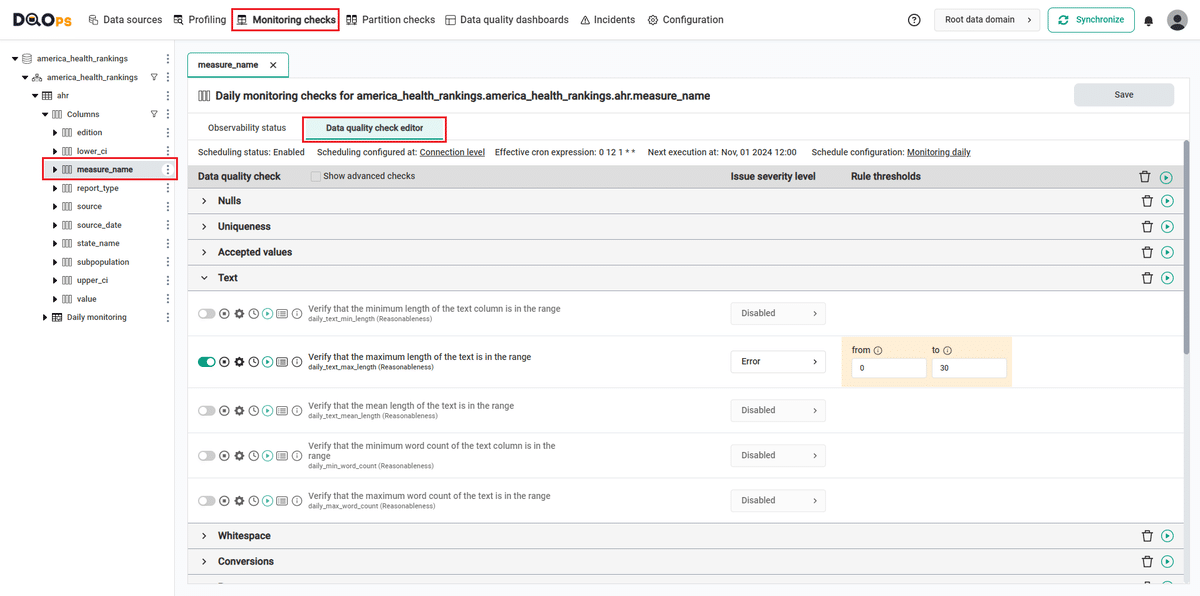

Navigate to a list of checks

To navigate to a list of checks prepared in the example using the user interface:

- Go to the Monitoring section.

  The Monitoring Checks section enables the configuration of data quality checks that are designed for the daily and monthly monitoring of your data source.

- Select the table or column mentioned in the example description from the tree view on the left.

  On the tree view you can find the tables that you have imported. Here is more about adding connection and importing tables.

- Select the Data quality check editor tab.

  This tab displays a list of data quality checks in the check editor. Learn more about navigating the check editor.

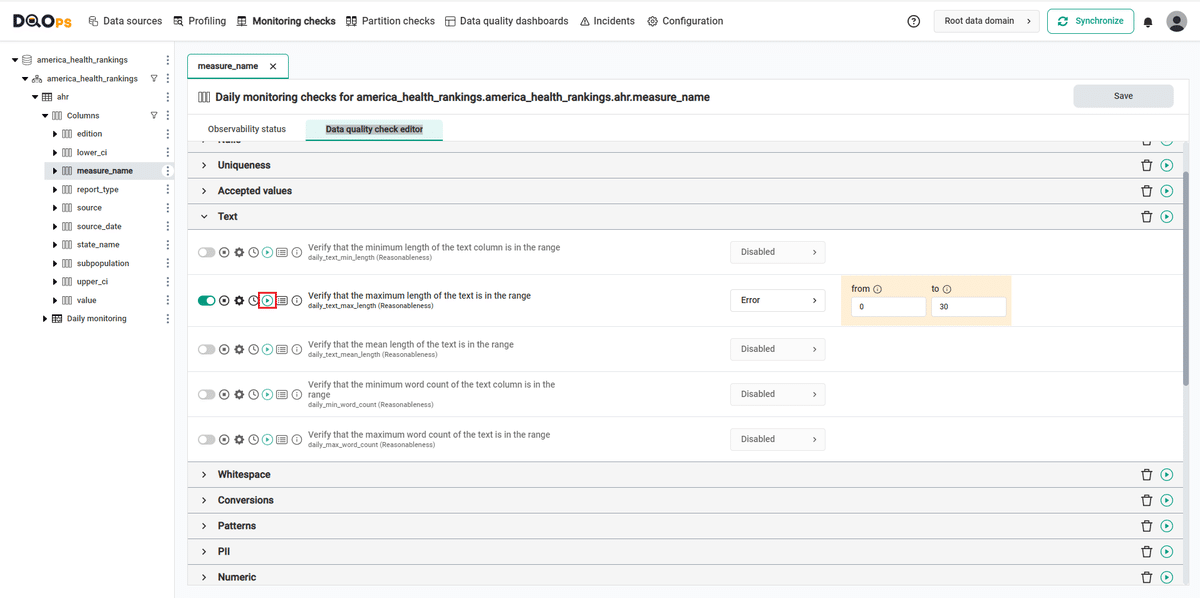

Run checks

Run the activated check using the Run check button.

You can also run all the checks for an entire subcategory of checks using the Run check button at the end of the line with the check subgroup name.

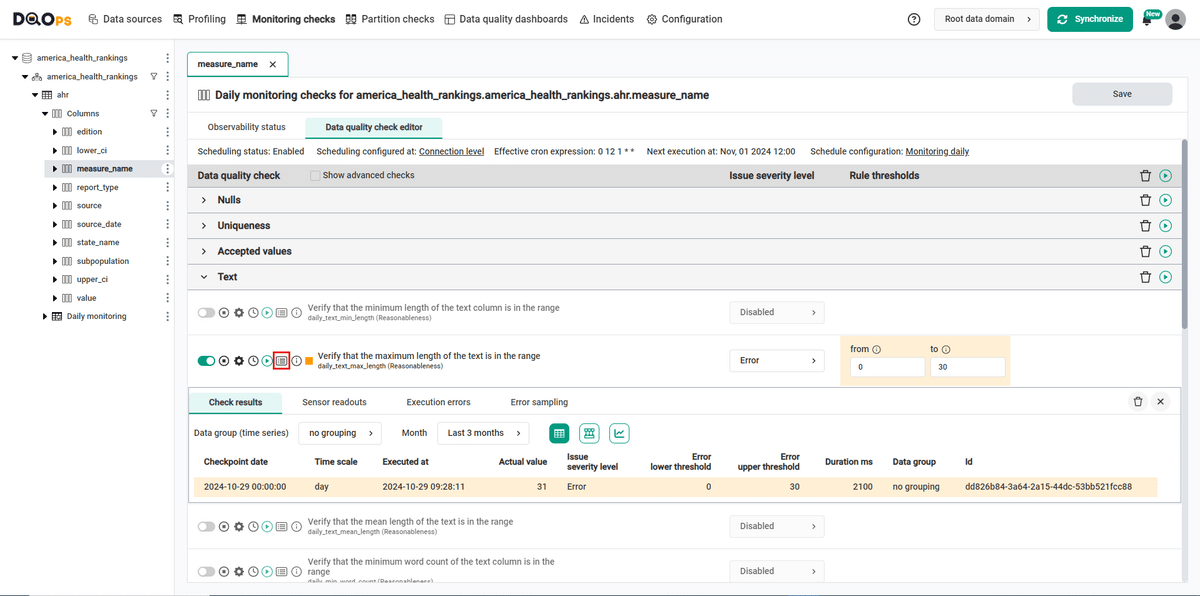

View detailed check results

Access the detailed results by clicking the Results button. The results should be similar to the one below.

Within the Results window, you will see four categories: Check results, Sensor readouts, Execution errors, and Error sampling. The Check results category shows the severity level that results from verifying the sensor readouts against the configured rule thresholds. The Sensor readouts category displays the values obtained by the sensors from the data source. The Execution errors category displays any errors that occurred during the check's execution. The Error sampling category displays examples of invalid values in the column.

The actual value in this example is 31, which falls outside the range set in the error field (0 - 30). The check therefore results in an error issue (notice the orange square to the left of the check name).
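
The idea behind the Error sampling category can be sketched as collecting a few distinct values that are longer than the limit. This is a minimal illustration, not the DQOps implementation. The function name, the `limit` parameter, and the placeholder strings are all hypothetical.

```python
from typing import Iterable, List, Optional


def sample_too_long(
    values: Iterable[Optional[str]], max_length: int = 30, limit: int = 5
) -> List[str]:
    """Return up to `limit` distinct values longer than `max_length`, in first-seen order."""
    samples: List[str] = []
    for value in values:
        if len(samples) >= limit:
            break
        if value is None or len(value) <= max_length:
            continue
        if value not in samples:
            samples.append(value)
    return samples


# Made-up placeholder strings, not values from the real dataset
# (apart from "Able-Bodied", which appears in the sample table).
column_values = ["Able-Bodied", "m" * 31, None, "m" * 31, "n" * 30]
too_long = sample_too_long(column_values)
```

Only the 31-character placeholder is returned, and only once. The 30-character value is still within the limit.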

Synchronize the results with the cloud account

Synchronize the results with your DQOps Cloud account using the Synchronize button located in the upper right corner of the user interface.

Synchronization ensures that the locally stored results are synced with your DQOps Cloud account, allowing you to view them on the dashboards.

Change a schedule at the connection level

With DQOps, you can easily customize when checks are run by setting schedules. You can set schedules for an entire connection, table, or individual check.

After importing new tables, DQOps sets the schedule for 12:00 P.M. (noon) every day. Follow the steps below to change the schedule.

- Navigate to the Data Source section.

- Choose the connection from the tree view on the left.

- Click on the Schedule tab.

- Select the Monitoring daily tab.

- Select the Run every day at option and change the time, for example, to 10:00. You can also select any other option.

- Once you have set the schedule, click on the Save button to save your changes.

By default, the scheduler is active. You can turn it off by clicking the notification icon in the top right corner of the screen and then clicking the toggle button.

Once a schedule is set up for a particular connection, it will execute all the checks that have been configured across all tables associated with that connection.
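
Daily schedules such as "every day at 12:00" or "every day at 10:00" are commonly written as five-field Unix cron expressions. The sketch below builds one for a given time. The helper name `daily_cron` is hypothetical, and whether DQOps stores the schedule in exactly this form is an assumption rather than something this example states.

```python
def daily_cron(hour: int, minute: int = 0) -> str:
    """Build a five-field Unix cron expression that fires once a day."""
    if not 0 <= hour <= 23:
        raise ValueError("hour must be between 0 and 23")
    if not 0 <= minute <= 59:
        raise ValueError("minute must be between 0 and 59")
    # Fields: minute, hour, day of month, month, day of week.
    return f"{minute} {hour} * * *"


default_schedule = daily_cron(12)  # noon, the default described above
changed_schedule = daily_cron(10)  # the 10:00 example from the steps
```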

You can read more about scheduling here.

You might also want to check the Running checks with a scheduler example.

YAML configuration file

The YAML configuration file stores both the table details and checks configurations.

In this example, we have set one threshold level for the check:

- error: range from 0 to 30

The monitoring_checks fragment under the measure_name column in the YAML file below is where the monitoring daily_text_max_length check is configured.

If you want to learn more about checks and threshold levels, please refer to the DQOps concept section.

    apiVersion: dqo/v1
    kind: table
    spec:
      incremental_time_window:
        daily_partitioning_recent_days: 7
        monthly_partitioning_recent_months: 1
      columns:
        edition:
          type_snapshot:
            column_type: INT64
            nullable: true
        report_type:
          type_snapshot:
            column_type: STRING
            nullable: true
        measure_name:
          type_snapshot:
            column_type: STRING
            nullable: true
          monitoring_checks:
            daily:
              text:
                daily_text_max_length:
                  error:
                    from: 0
                    to: 30
        state_name:
          type_snapshot:
            column_type: STRING
            nullable: true
        subpopulation:
          type_snapshot:
            column_type: STRING
            nullable: true
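
To see how the check sits inside the table specification, it can help to walk the nested structure down to the check name. The sketch below uses a hand-written Python dict that mirrors a fragment of the YAML above; it does not parse the file. The function name is hypothetical.

```python
from typing import Any, Dict, Iterator, Tuple

# Hand-written dict mirroring a fragment of the YAML (only two columns kept).
table_spec: Dict[str, Any] = {
    "spec": {
        "columns": {
            "report_type": {
                "type_snapshot": {"column_type": "STRING", "nullable": True},
            },
            "measure_name": {
                "type_snapshot": {"column_type": "STRING", "nullable": True},
                "monitoring_checks": {
                    "daily": {
                        "text": {
                            "daily_text_max_length": {
                                "error": {"from": 0, "to": 30},
                            },
                        },
                    },
                },
            },
        },
    },
}


def find_daily_text_max_length(
    spec: Dict[str, Any],
) -> Iterator[Tuple[str, Dict[str, Any]]]:
    """Yield (column_name, check_config) for columns that configure the check."""
    for name, column in spec["spec"]["columns"].items():
        check = (
            column.get("monitoring_checks", {})
            .get("daily", {})
            .get("text", {})
            .get("daily_text_max_length")
        )
        if check is not None:
            yield name, check


configured = list(find_daily_text_max_length(table_spec))
```

Only measure_name is reported, with the error range from 0 to 30. The other columns carry a type snapshot but no checks.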

In this example, we have demonstrated how to use DQOps to verify the reasonability of data in a column. By using the text_max_length column check, we can monitor whether the maximum length of the text in a column stays within a set range. If it does not, you will get an error result.

Next steps

- You haven't installed DQOps yet? Check the detailed guide on how to install DQOps using pip or run DQOps as a Docker container.

- For details on the text_max_length check used in this example, go to the check details section.

- You might be interested in another reasonability check that evaluates that the percentage of false values does not fall below the minimum percentage.

- DQOps allows you to keep track of the issues that arise during data quality monitoring and send alert notifications directly to Slack. Learn more about incidents and Slack notifications.

- The data in the table often comes from different data sources and vendors or is loaded by different data pipelines. Learn how data grouping in DQOps can help you calculate separate data quality KPI scores for different groups of rows.