In today’s interconnected world, organizations heavily rely on internal and external data sharing. This necessitates ensuring data conformity and adherence to agreed-upon data formats. Organizations frequently store data in databases to use in business applications or for analysis on dashboards. Therefore, the validity and proper formatting of this data are crucial.

Data conformity also has implications for storing the data. Software cannot automatically fix data errors it does not know about, so valid formats must be enforced and monitored. If the formats are not enforced, incorrectly formatted values can lead to significant issues, including problems with regulatory compliance. For instance, if customer records in a database store “tax id” values in an invalid format, invoices issued from those records will carry incorrect identifiers, which can breach invoicing regulations.

Specifically, many countries, particularly in Europe, mandate that VAT invoices use correctly formatted tax identifiers. An incorrectly formatted tax identifier is clear proof of inaccurate data. Furthermore, it hinders the ability to verify company names against tax identifiers using public APIs. Such an API call would be rejected due to the format mismatch, making it impossible to fix the data automatically.

Data Conformity Definition

Data conformity means that data follows specific rules about its format, data type, and size, such as the dates provided as “YYYY-MM-DD”. Hence, the date “2024-12-18” conforms to this format, but “December 18th, 2024” does not. These rules ensure that the data is consistent and usable for its intended purpose. This is important because different computer systems and applications often need data in a specific format to work correctly.
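As a minimal sketch of this rule, the check below tests whether a text value is a real calendar date written exactly as “YYYY-MM-DD”. The function name `conforms_to_iso_date` is an illustrative assumption, not part of any particular tool.

```python
from datetime import datetime


def conforms_to_iso_date(value: str) -> bool:
    """Return True if value is a valid calendar date written as YYYY-MM-DD."""
    try:
        parsed = datetime.strptime(value, "%Y-%m-%d")
    except ValueError:
        # Wrong layout, or an impossible date such as 2024-02-30.
        return False
    # strptime tolerates unpadded parts such as "2024-1-5", so compare the
    # value with the zero-padded ISO rendering to require the exact layout.
    return parsed.date().isoformat() == value


print(conforms_to_iso_date("2024-12-18"))           # True
print(conforms_to_iso_date("December 18th, 2024"))  # False
```

Parsing the value, rather than only matching digits and dashes, also rejects values that have the right shape but are not real dates.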

Data conformity is one of the data quality dimensions, which are categories used to classify data quality issues. Conformity is a subset of the data validity dimension, which checks that values are correct. Conformity is stricter: it requires that the format of values is specified upfront, so that the values can be reliably converted to a different format or processed automatically in business processes.

Examples of Data Conformity Errors

Text values can store data in various formats, but to ensure accurate processing, these values must adhere to a predefined format. Several common data types require careful attention to ensure conformity:

- Date values: While databases offer native date and timestamp data types, challenges arise when dates are initially provided in a free-form text format. In such cases, it’s essential to enforce a consistent format, such as “YYYY-MM-DD”. For instance, a date like “20/12/2024” would not conform to this standard.

- Numeric values: When text values are intended for conversion to numeric data types, they must represent valid numbers. Acceptable examples include “223” or “-324.4”, while a value like “abc” would violate conformity.

- Identifiers and codes: Organizations and institutions often employ specific formats for tax identifiers, invoice numbers, or product codes. These formats can be defined using patterns, frequently expressed as regular expressions. For example, the value “A-1” conforms to the pattern “[A-Z]+-[0-9]+”, whereas “11-A/3” does not.
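The numeric and identifier cases above can be sketched with Python's standard `re` module. The helper names `is_numeric_text` and `matches_pattern` are assumptions made for this illustration, and the numeric rule accepts only plain decimal numbers (no thousands separators or exponents).

```python
import re

# The identifier pattern quoted in the text above.
CODE_PATTERN = r"[A-Z]+-[0-9]+"


def is_numeric_text(value: str) -> bool:
    """True for plain decimal numbers such as "223" or "-324.4"."""
    return re.fullmatch(r"[+-]?[0-9]+(\.[0-9]+)?", value) is not None


def matches_pattern(value: str, pattern: str) -> bool:
    """True only if the whole value matches the pattern."""
    return re.fullmatch(pattern, value) is not None


for text in ["223", "-324.4", "abc"]:
    print(text, is_numeric_text(text))

for code in ["A-1", "11-A/3"]:
    print(code, matches_pattern(code, CODE_PATTERN))
```

The sketch uses `re.fullmatch` rather than `re.search` so that a value conforms only when the entire text fits the pattern, not just a fragment of it.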

How to Find Values in a Wrong Format

Data quality tools, such as DQOps, offer built-in checks to detect common conformity errors. To leverage these tools, you first register the table containing the data as a data source within the tool. Then, you select the specific column to analyze and activate the appropriate check to verify the data format.

Many conformity checks rely on regular expressions to validate data formats. Regular expressions have a standardized syntax, but you rarely need to write them yourself: data quality tools often provide predefined libraries of checks for common formats like dates and numbers, eliminating the need for programming expertise.

For less common formats, some tools offer “data quality rule mining.” This process utilizes machine learning to analyze data and automatically derive the most frequent format. Such tools can effectively identify the correct formatting for tax identifiers or product codes, so you don’t need to work out the pattern yourself.
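To make the idea of deriving the most frequent format concrete, here is a deliberately simple sketch. It is a frequency heuristic written for this article, not machine learning and not the algorithm that DQOps or any other tool uses. The function names and the sample product codes are made up for the illustration.

```python
import re
import string
from collections import Counter

# Character classes that are generalized; every other character stays literal.
CHAR_CLASSES = (
    (string.digits, "[0-9]+"),
    (string.ascii_uppercase, "[A-Z]+"),
    (string.ascii_lowercase, "[a-z]+"),
)


def value_shape(value: str) -> str:
    """Generalize a value into a regex, e.g. letters, a dash, then digits."""
    tokens = []
    for ch in value:
        cls = next((tok for chars, tok in CHAR_CLASSES if ch in chars), None)
        if cls is None:
            tokens.append(re.escape(ch))  # punctuation is kept as a literal
        elif not tokens or tokens[-1] != cls:
            tokens.append(cls)  # a run of the same class becomes one token
    return "".join(tokens)


def mine_dominant_pattern(values: list[str]) -> tuple[str, float]:
    """Return the most frequent shape and the share of values that have it."""
    if not values:
        raise ValueError("cannot mine a pattern from an empty sample")
    pattern, count = Counter(value_shape(v) for v in values).most_common(1)[0]
    return pattern, count / len(values)


# Hypothetical product codes; one of them uses a different layout.
samples = ["PL-1234", "DE-9981", "FR-42", "1234/PL", "GB-7"]
pattern, share = mine_dominant_pattern(samples)
outliers = [v for v in samples if re.fullmatch(pattern, v) is None]
print(pattern, share, outliers)
```

The mined pattern can then be reviewed by a person and, if it looks right, used as the rule that flags the remaining values as conformity errors.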

Upon verifying conformity and identifying errors, you can share samples of these errors with data providers. This facilitates clear communication and allows you to request that they provide data in a valid format, thereby improving overall data quality.

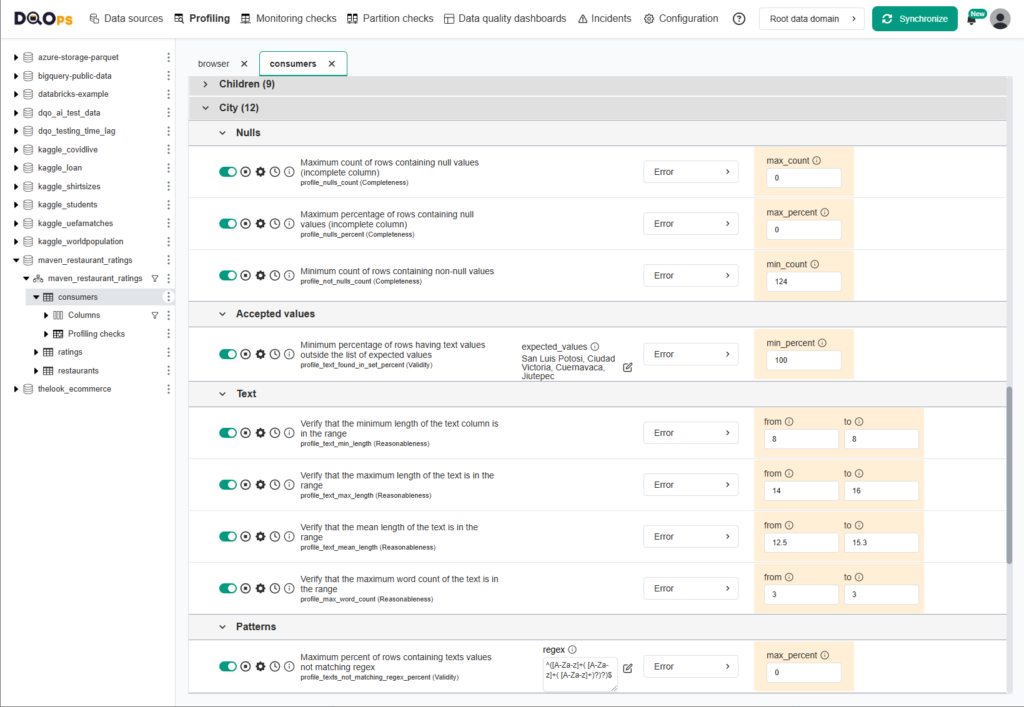

The following screenshot of the rule mining screen in DQOps shows the rules the tool detected and wants to verify.

Best Practices for Monitoring Conformity of Data

Maintaining data conformity over time requires a proactive approach. The following best practices can help ensure ongoing compliance:

- Utilize data quality tools with automated rule mining: Selecting tools that support automated rule mining simplifies the process of defining data formats for validation. This automation reduces manual configuration and allows the tool to learn and adapt to evolving data patterns.

- Share error samples with data stakeholders: When non-conformant values are detected, exporting a few example rows and sharing them with data source owners or users who entered the incorrect values can be highly effective. Concrete examples facilitate understanding and encourage better data entry practices.

- Continuously monitor data conformance with data observability tools: Implementing data observability tools enables regular and automated testing of data quality. These tools can verify conformity on a daily basis and promptly notify data owners of any deviations, allowing for timely intervention and remediation.
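A recurring check of this kind can be sketched as a function that measures the share of conforming values, compares it with a threshold, and keeps a few error samples to share with the data owner. Everything here is an assumption for illustration: the `ConformityResult` type, the `check_conformity` function, and the threshold values are hypothetical, and scheduling and notifications are left to whatever observability tool or scheduler you use.

```python
import re
from dataclasses import dataclass


@dataclass
class ConformityResult:
    total: int
    valid_percent: float
    passed: bool
    invalid_samples: list[str]  # a few examples to send to the data owner


def check_conformity(
    values: list[str],
    pattern: str,
    min_valid_percent: float = 99.0,  # hypothetical default threshold
    max_samples: int = 5,
) -> ConformityResult:
    """Measure how many values fully match the pattern and apply a threshold."""
    regex = re.compile(pattern)
    invalid = [v for v in values if regex.fullmatch(v) is None]
    total = len(values)
    # An empty column has nothing that violates the format.
    valid_percent = 100.0 if total == 0 else 100.0 * (total - len(invalid)) / total
    return ConformityResult(
        total=total,
        valid_percent=valid_percent,
        passed=valid_percent >= min_valid_percent,
        invalid_samples=invalid[:max_samples],
    )


result = check_conformity(
    ["A-1", "B-22", "11-A/3", "C-3"], r"[A-Z]+-[0-9]+", min_valid_percent=90.0
)
print(result.valid_percent, result.passed, result.invalid_samples)
```

Using a percentage threshold instead of failing on the first invalid value lets a daily run tolerate a small known error rate while still raising an issue when conformity degrades.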

What is the DQOps Data Quality Operations Center

DQOps is a data quality and observability platform designed to monitor data and assess the data quality trust score with data quality KPIs. DQOps provides extensive support for configuring data quality checks, applying configuration by data quality policies, detecting anomalies, and automatically finding patterns using machine learning. DQOps supports more than 150 data quality checks, which cover all popular formats used in data conformance testing.

DQOps combines two roles. It is a data quality platform for assessing the data quality of data assets. It is also a complete data observability platform that monitors data and measures data quality metrics at the table level, tracking health scores with data quality KPIs.

You can set up DQOps locally or in your on-premises environment to learn how DQOps can monitor data sources and ensure data quality within a data platform. Follow the DQOps documentation, go through the DQOps getting started guide to learn how to set up DQOps locally, and try it.

You may also be interested in our free eBook, “A step-by-step guide to improve data quality.” The eBook documents our proven process for managing data quality issues and ensuring a high level of data quality over time. This is a great resource to learn about data quality.