The example will show how easy it is to start monitoring data quality with DQOps by detecting empty or incomplete tables using data quqlity checks.

The standard installation of the DQOps platform includes a set of examples, which can be found in the `example/` directory.

These examples use openly available datasets from Google Cloud. The following example describes how to verify that the number of rows in a table does not exceed the minimum accepted count.

Here you can check the full list of examples.

Prerequisites

To use the examples you need:

- Installed DQO

- A BiqQuery service account with BigQuery > BigQuery Job User permission. You can create a free trial Google Cloud account here.

- A working Google Cloud CLI if you want to use Google Application Credentials authentication.

After installing Google Cloud CLI, log in to your GCP account by running:

gcloud auth application-default login

Problem

America’s Health Rankings analyzes national health on a state-by-state basis. It evaluates a historical and comprehensive set of health, environmental, and socioeconomic data to determine national health benchmarks and state rankings.

The platform analyzes more than 340 measures of behaviors, social and economic factors, physical environment, and clinical care data.

Data is based on public-use data sets, such as the U.S. Census and the Centers for Disease Control and Prevention’s Behavioral Risk Factor Surveillance System (BRFSS), the world’s largest annual population-based telephone survey of over 400,000 people.

Here is a table with some sample customer data from the bigquery-public-data.america_health_rankings.ahr dataset. Some columns were omitted for clarity.

For any database analysis, empty or incomplete data can significantly hinder analysis and lead to inaccurate conclusions.

In this example, we will detect that the table is not empty and meet the size requirement.

Solution

DQOps offers built-in data quality policies that address common issues like table emptiness. You will run the row_count table check to validate if the table is not empty.

Once we confirm the table is not empty, the DQOps rule miner engine can help us automatically set higher thresholds to monitor if the table size meets our size requirements.

Value

If the number of rows in a table falls below a set threshold, DQOps will create an incident and can send a notification to relevant stakeholders.

Data quality best practices - a step-by-step guide to improve data quality

- Learn the best practices in starting and scaling data quality

- Learn how to find and manage data quality issues

Running the example

To run the examples using the graphical interface, follow the steps below.

- Go to the directory where you installed DQO and navigate to

examples/data-completeness/detect-empty-tables-bigquery. Run the commandrun_dqoin Windows or./run_dqoin MacOS/Linux. - Create DQO `userhome` folder. After installation, you will be asked whether to initialize the DQO “userhome” folder in the default location. Type Y to create the folder. The userhome folder locally stores data such as sensor and checkout readings, as well as data source configurations.

- Login to DQO Cloud.

To use DQO features, such as storing data quality definitions and results in the cloud or data quality dashboards, you must create a DQO cloud account. After creating an userhome folder, you will be asked whether to log in to the DQO cloud. After typing Y, you will be redirected to https://cloud.dqops.com/registration, where you can create a new account, use Google single sign-on (SSO), or login if you already have an account. During the first registration, a unique identification code (API Key) will be generated and automatically passed to the DQO application. The API Key is now stored in the configuration file. - Open the DQO User Interface Console (http://localhost:8888).

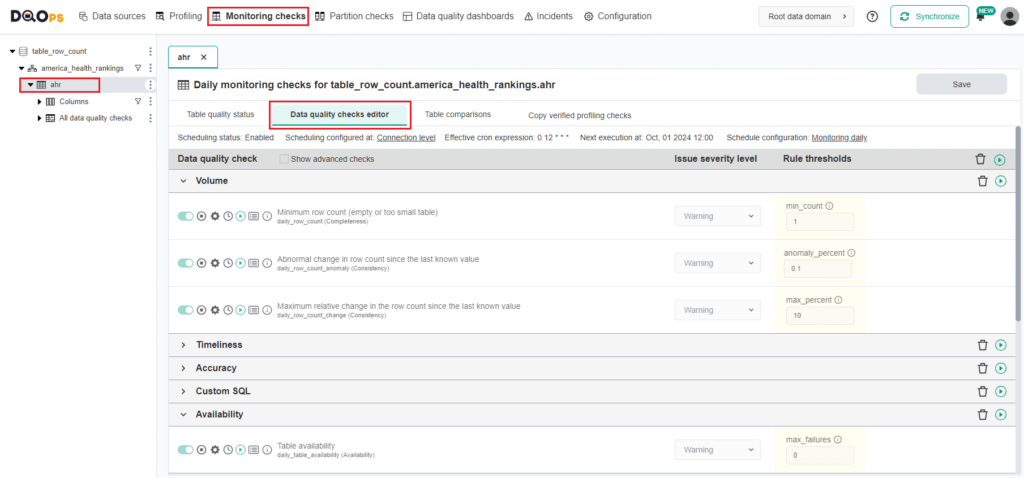

- Click on the Monitoring checks section.

- Select the

ahrtable from the tree view on the left. - Click on the Data quality checks editor tab.

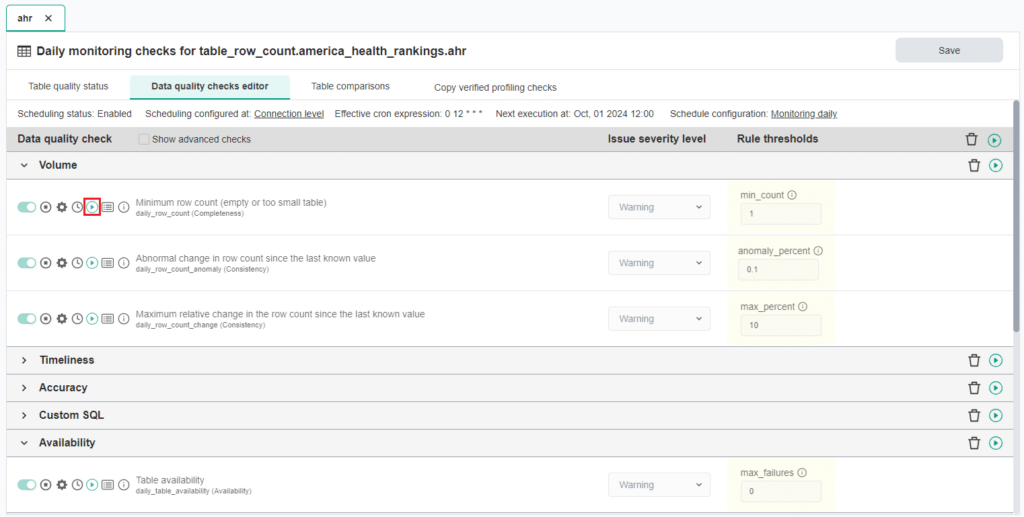

- Run the activated row_count check using the Run check button.

You can also run all table checks using the Run check button located in the top right corner of the table.

- Review the results by opening the Check details button.

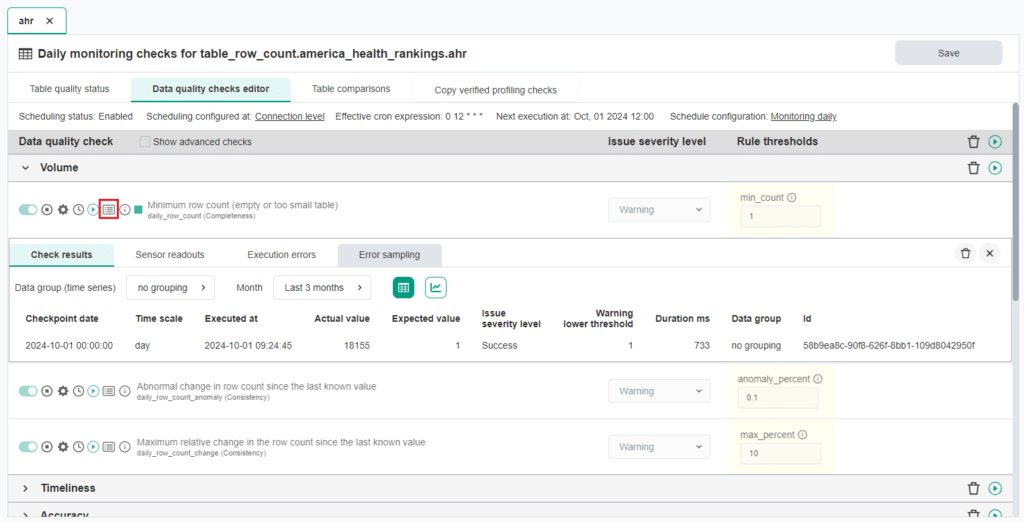

- You should see the results as the one below.

The actual value of rows in this example is 18155, which is above the

min_countrule threshold warning severity level of 1. The check gives a correct result, providing assurance that your table is not empty.

- After confirming that your table is not empty, you can set higher thresholds to ensure that the table meets size requirements. Setting threshold can be done automatically using the rule miner engine.

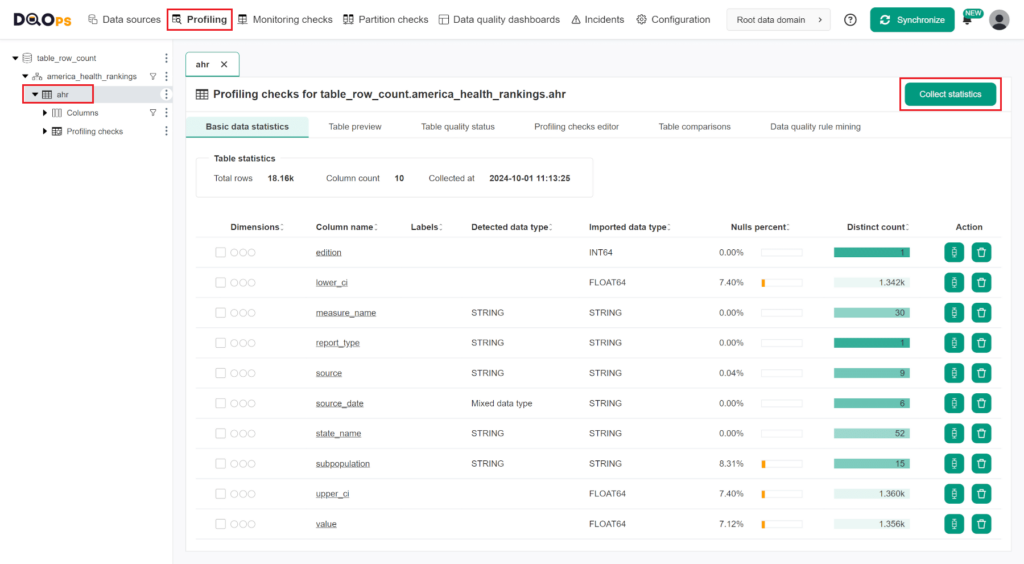

- Navigate to the Profiling section.

- Select the

ahrtable from the tree view on the left. This will open the Basic data statistics tab. - Click on the Collect statistics. This will collect the basic statistics for the table and all columns, as shown on the screen below.

Learn more about basic statistics in the Working with DQOps section.

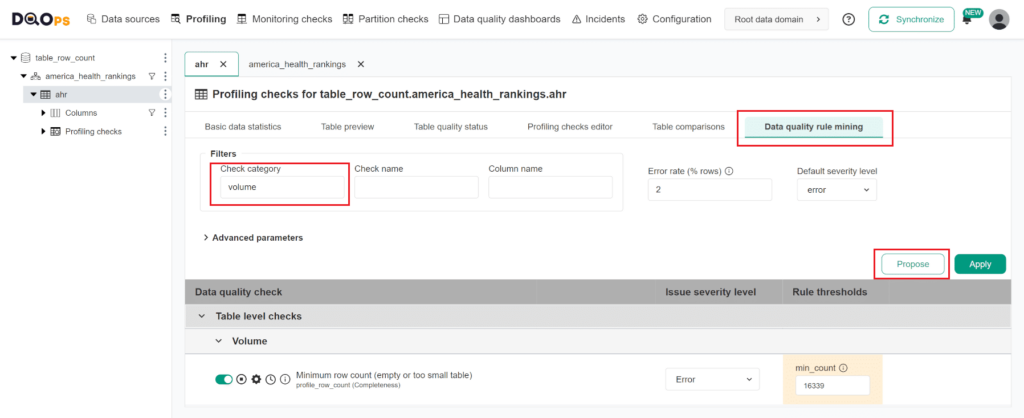

After collecting the basic statistics, the rule mining screen can propose a configuration of data quality checks. To navigate to the rule miner, click on the Data quality rule mining tab in the Profiling section.The rule mining screen allows you to view and select the automatically proposed configuration of data quality checks. DQOps proposes a list of data quality checks instantly upon entering the rule mining screen.You can use filters to narrow down the list of data quality check proposals. Filter the results to include only checks from the volume category by entering “volume” in the Check category input field.

You will see a proposition of profile_row_count check configuration that will raise an error if the minimum count of rows fails below 16,339. Pressing the Apply button saves the configuration of data quality checks and their rules. A popup window will appear, notifying you that the checks have been activated and that you can run the activated check by clicking on the Confirm button.

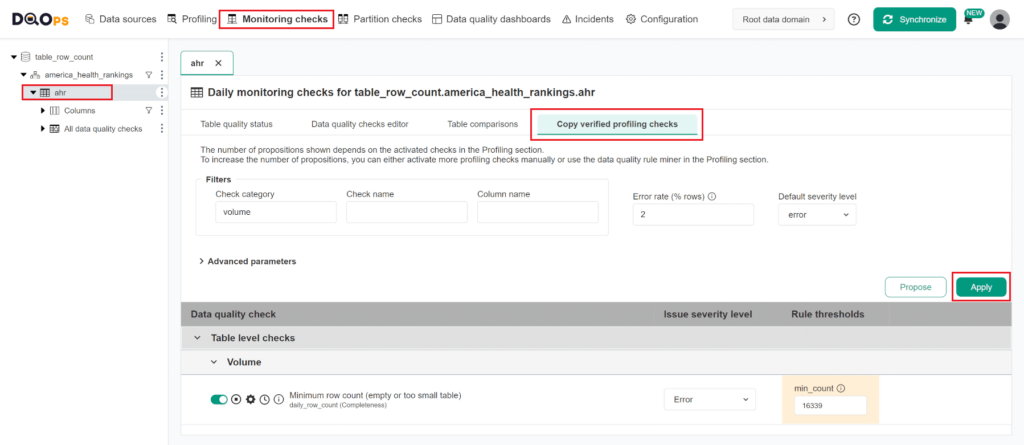

To start monitoring data quality using a newly configured check we need to copy the configuration of the row_count check in the Profiling section to the Monitoring checks:

- Navigate to the Monitoring checks section.

- Select the table from the tree view on the left.

- Click on the Copy verified profiling checks tab.

- Click the Apply button.

- Click the Confirm button in the popup to run configured checks.

Conclusion

The example showed how easily you can start monitoring the quality of your data with DQOps.