The Shifting Landscape of Data Quality in 2024: Trends You Need to Know

1. Data Quality: From Backroom to Boardroom

For too long, data quality has been an afterthought, relegated to the technical IT department. This is changing fast. Businesses are recognizing that high-quality data is a strategic asset, as vital to success as financial resources or skilled personnel. Here’s how this shift is manifesting:

- C-suite and executive sponsorship: CEOs, CFOs, and other executives are actively driving data quality initiatives. They understand that inaccurate reports, misleading forecasts, and compliance issues caused by poor data can seriously hinder business growth and reputation.

- Business KPIs tied to data quality: Companies are incorporating data quality metrics into their performance dashboards. This goes beyond just counting errors – it could involve tracking improvements in decision-making accuracy, the reduction of customer complaints related to data issues, or faster time to insights.

- Data quality as a competitive differentiator: In a world where many organizations have access to similar technology and information, those with the cleanest, most reliable data gain an operational and strategic edge. The ability to make quick, confident decisions based on high-quality data can be a major competitive advantage.

- Cross-departmental collaboration: No single team owns data quality. Sales, marketing, finance, product development, and operations all have a stake in the problem (and its solution). This breakdown of silos leads to better communication and shared data quality goals.

This shift elevates data quality to a core business function, ensuring that data-driven insights are reliable, actionable, and aligned with the organization’s strategic goals.

In today’s data-driven world, it’s not just about the quantity of data your organization collects but also about its quality. Bad data leads to bad decisions, missed opportunities, regulatory headaches, and a whole lot of frustration. Fortunately, the field of data quality is evolving rapidly, and these trends are shaping the future:

2. Data Observability: Your Data's Early Warning System

Borrowing concepts from observability practices in software development (DevOps), data observability provides a comprehensive and proactive approach to monitoring the health of your data and data pipelines. Here’s how it goes beyond traditional monitoring:

- Beyond “Is it working?” Traditional monitoring typically tells you if something has broken. Data observability digs deeper, focusing on metrics like:

- Freshness: How up-to-date is your data, and are updates arriving on schedule?

- Distribution: Does the data’s distribution match expectations (e.g., no unexpected spikes or dips in key metrics)?

- Volume: Is the expected amount of data flowing in and out of the system?

- Completeness: Is the data complete, all key columns contain values?

- Schema: Are there unexpected changes to data structure or field definitions?

- Real-time alerts Data observability tools can trigger alerts when these metrics deviate from the norm, allowing teams to address anomalies before they snowball into major problems. Learn more about incidents and alerts in the DQOps platform.

- Root cause analysis: It’s not just about identifying that something is wrong, but why. Data observability allows you to track issues back through the data pipeline, pinpoint the source, and determine whether the problem lies in upstream systems, code errors, or configuration issues. Read more about root cause analysis in our blog.

- Preventing Downstream Impacts: Catching errors early means you can potentially prevent them from contaminating analytics dashboards, messing up customer records, or causing incorrect AI predictions.

- Data Observability vs. Data Monitoring Think of data monitoring as checking the oil level in your car’s engine. Data observability is like having a full array of sensors and gauges monitoring your car’s performance in real time, alerting you of potential problems before they leave you stranded on the side of the road. You can read more about the differences between data observability and data monitoring in our blog post.

Data observability is crucial for complex data environments, as it enables data teams to maintain trust in their data and react quickly to evolving data needs.

3. Everyone's in the Data Quality Game

Traditionally, data quality was seen as the exclusive responsibility of IT teams or dedicated data engineers. This is changing rapidly towards a more democratized approach where business users play a proactive role. Here’s why this is happening:

- Business users know their data best: The people who work with data daily (customer service reps, sales teams, analysts) often have the deepest understanding of how it’s used, the common errors they encounter, and the impact those errors have on their work.

- Self-service tools lower the technical barrier: Modern data quality platforms often have intuitive, user-friendly interfaces. This means business users can participate in basic profiling, spot inconsistencies, suggest corrections, and collaborate on data improvement without needing extensive technical expertise.

- Empowerment leads to ownership: When business users are directly involved in improving data quality, they develop a sense of ownership and accountability. They are more likely to maintain high standards and less likely to tolerate shortcuts that lead to inaccurate data.

- Data literacy boost: Participation in data quality initiatives helps business users become more data-savvy. They improve their understanding of data structures, the importance of proper formatting, and common sources of error.

- Faster feedback loops: Instead of waiting on data teams to identify and fix issues, business users can directly flag anomalies or potential problems. This leads to shorter error correction cycles and less downstream impact.

Note: This doesn’t eliminate the need for specialized data quality teams. Rather, it creates a collaborative model where business users act as “eyes on the ground” while data professionals focus on complex tasks, tool development, and system-wide improvements.

4. AI and ML: Your Data Quality Superstars

Artificial intelligence and machine learning are transforming how we approach data quality. Far from replacing human expertise, AI and ML offer a powerful toolkit that enhances and scales traditional data quality processes. Here’s how:

- Pattern recognition at scale: AI algorithms can effortlessly analyze vast amounts of data, identifying patterns and anomalies that would be difficult or tedious for humans to spot. This includes detecting duplicates, format inconsistencies, missing values, and unusual outliers.

- Smarter anomaly detection: AI models can learn to distinguish between “normal” variations and genuine data quality issues. This reduces false positives and lets data teams focus on the most critical problems.

- Automated data cleansing suggestions: Beyond identifying errors, AI can recommend corrections or cleansing fixes. For example, suggesting standardized address formats or filling in missing values based on previous entries.

- Root-cause analysis assistance: ML models can be trained to understand relationships between different data fields and events. This helps trace data quality issues back to their source, supporting the drive to fix the underlying problem, not just the symptom.

- Adaptive learning: AI-powered data quality systems continuously learn and improve over time. As new data flows in and users provide feedback, the models become more accurate and efficient at identifying and resolving issues.

Important Note: The success of AI in data quality depends on:

- High-quality training data: AI models learn from examples, so it’s important to feed them reliable and well-labeled data.

- Human oversight and guidance: AI should be seen as a powerful tool, not a magic bullet. Data experts are still crucial for interpreting AI’s output, making final decisions, and refining models.

AI and ML in data quality aren’t about eliminating human involvement, but rather empowering teams to work smarter, faster, and with a greater focus on strategic tasks.

5. The Cloud Democratizes Data Quality

Cloud-based data quality platforms are breaking down barriers and making sophisticated tools and resources accessible to businesses of all sizes. Here’s how the cloud is changing the data quality landscape:

- No upfront infrastructure investment: Traditionally, implementing enterprise-grade data quality tools required significant hardware purchases, software installation, and ongoing maintenance. Cloud solutions eliminate those hurdles, operating on a subscription model.

- Scalability on demand: Cloud platforms let you easily adjust your data quality resources as your needs evolve. If you have a sudden influx of data, need to launch a new data quality initiative, or onboard additional users, the cloud provides flexibility.

- Faster innovation: Cloud providers constantly update and improve their data quality offerings. This means organizations benefit from the latest features and techniques without lengthy in-house development cycles.

- Focus on core competencies: By outsourcing a degree of data quality infrastructure and tool management to the cloud, internal IT teams can focus on strategic projects and support, rather than low-level maintenance.

- Lower barriers to entry for small and medium-sized businesses: Advanced data quality capabilities were once reserved for large enterprises with hefty budgets. Cloud solutions level the playing field, letting smaller companies access powerful tools that can improve their competitiveness.

- Easier collaboration: Cloud-based platforms make it easier for distributed teams or partners to collaborate on data quality initiatives, sharing insights and working towards common goals.

Important Considerations:

- Security and Compliance: When evaluating cloud-based data quality solutions, it’s critical to carefully assess the provider’s security measures and their ability to meet regulatory compliance standards relevant to your industry.

- Integration: Consider how the cloud data quality platform will integrate with your existing data systems (data warehouses, CRM systems, etc.). Seamless integration is important to maintain efficient workflows.

The cloud is democratizing data quality, making it a practical reality for organizations that may have previously struggled to implement robust data quality practices.

6. Getting to the Root of the Problem

Data quality isn’t just about fixing errors as they pop up. For lasting improvements, it’s vital to understand why those errors occur in the first place. Here’s why a root-cause analysis approach is crucial:

- Preventing recurrence: If you only focus on the surface-level error, the same problem (or a similar one) is likely to keep happening. Root-cause analysis helps you identify and address the underlying issue so it doesn’t keep causing data quality headaches.

- Systemic vs. human errors: Root-cause analysis helps distinguish between errors caused by human mistakes and those stemming from systemic problems. This guides appropriate solutions – is it additional training that’s needed, or a process change, or perhaps a faulty data entry system?

- Examples of root causes:

- Poorly designed input forms: If a data entry form doesn’t have clear field definitions or validation rules, it will inevitably lead to inconsistent, messy data.

- Manual processes prone to typos: Reliance on spreadsheets or manual data copying introduces risks of human error.

- Lack of synchronization between systems: When data exists in multiple systems without proper updates and reconciliation, data quality inevitably suffers.

- Outdated or missing data standards: If the organization lacks clear guidelines on things like date formats or address structures, you’ll end up with incompatible data.

- Methods for root-cause analysis: Techniques like the “5 Whys” (asking ‘why’ repeatedly to drill down to the cause) and fishbone diagrams (visually mapping potential causes) are useful tools.

Benefits of Root-Cause Analysis in Data Quality:

- Long-term data quality improvement: By addressing root causes, you establish systems and processes that produce more reliable data in the future.

- Reduced firefighting: Teams spend less time constantly fixing similar errors and more time on proactive data quality strategies.

- Cost savings: Preventing errors upstream often yields significant cost savings compared to continuously cleaning up messy data.

- Improved decision-making: When you can trust the data, you’re less likely to make choices based on faulty assumptions, mitigating business risks.

Root-cause analysis turns data quality into a proactive effort, driving lasting improvements rather than short-term patches.

7. Data Governance: The Foundation of It All

Data governance is the framework of policies, standards, processes, roles, and responsibilities that define how an organization manages, uses, and protects its data assets. Think of it as the rulebook that ensures everyone is playing by the same data quality rules. Here’s why it’s so crucial:

- Consistency across the organization: Data governance defines clear standards for data formats, definitions, quality metrics, and how data flows through the organization. This prevents data silos and ensures that everyone is working with the same understanding of what the data means and how reliable it is.

- Accountability and ownership: Data governance outlines who is responsible for different data sets, who is authorized to access and modify them, and who should be involved in resolving data quality issues. This sense of ownership promotes accountability and helps ensure data is treated as a valuable asset.

- Regulatory compliance: Data governance helps organizations meet complex data privacy and security regulations like GDPR, CCPA, and industry-specific standards. Consistent policies and processes make it easier to demonstrate compliance.

- Improved trust: When data is well-governed, users throughout the organization have more confidence in its accuracy and reliability. This leads to better adoption of analytics tools, more informed decision-making, and increased trust in data-driven insights.

- Enabling data quality initiatives: Data governance isn’t just about rules. It also encompasses the processes and technologies that support data quality. This includes tools for data profiling, cleansing, monitoring, and lineage tracking.

Key Components of Data Governance:

- Data policies: Formal, high-level statements about how data should be managed, protected, and used.

- Data standards: Detailed definitions of data elements, formats, allowable values, and quality thresholds.

- Data ownership and stewardship: Assigning clear roles for data owners (often business-side decision-makers) and data stewards (technical experts responsible for day-to-day management).

- Processes for data lifecycle management: Defining processes for data creation, collection, storage, usage, and archival/deletion.

- Metrics and reporting: Establishing ways to measure data quality, track progress, and communicate performance to stakeholders.

Data governance is an ongoing effort, not a one-time project. It requires strong leadership, cross-functional collaboration, and continuous adaptation as an organization’s data needs and the regulatory environment evolve.

Why You Should Care

These trends offer tangible benefits for any data-driven business:

- Smarter decisions based on good data

- Amazing customer experiences powered by accurate information

- Compliance with strict privacy regulations

- Streamlined operations with less waste and rework

- Confidence in the results of your analytics and AI models

Meet DQOps Data Quality Operations Center

We have created a comprehensive data quality platform that follows the trends in data quality. It is the only platform designed to streamline the communication between data engineering teams, data science teams and data quality teams.

Our main principle was to make a data quality platform that works for all stakeholders and fosters collaboration.

- DevOps for data quality: DQOps is a platform for ensuring the quality and reliability of data throughout its lifecycle, just like DevOps focuses on streamlining software development and delivery. DQOps is tailored with DevOps teams in mind. Learn more about the features of DQOps.

- Collaboration is key: DQOps emphasizes collaboration between data engineers, data scientists, business analysts, data operations, data quality teams, and other stakeholders to maintain trustworthy data within an organization.

- Integral part of DataOps: DQOps integrates well with data platforms becoming a crucial component of a broader DataOps strategy, which aims to increase the agility, efficiency, and value derived from data pipelines.

This short summary of core DQOps features gives a glimpse of our data quality management approach:

- Extensibility: Define a standard set of custom data quality checks and hand over the data quality platform to the data operations team.

- Data quality KPIs: Measure data quality with reliable KPIs and share them with all stakeholders and business sponsors.

- DevOps friendly: Configure data quality checks and rules in YAML files, edit with full code completion in Visual Studio Code, and version the files in Git.

- Data observability: Detect anomalies and common data quality issues related to null values, duplicate values, and outliers.

- Data quality: Go beyond standard data observability. Use over 150 data quality checks as part of regular data quality monitoring to detect business-relevant data quality issues your end users want to monitor.

DQOps’ ability to provide value for both technical and non-technical teams helps solve the biggest challenges in data management.

- Poor data, poor decisions: Inaccurate, inconsistent, or incomplete data can lead to wrong business decisions, damaged customer trust, and operational inefficiencies. The 150+ data quality checks in DQOps detect all common data quality issues. DQOps can activate data quality checks automatically, which instantly provides the benefits of Data Observability. The data operation teams can also use the user interface to review the recent issues and tweak the data quality rules to detect more complex data quality issues.

- Growing data complexity: The increasing volume, speed, and variety of data make it challenging to maintain quality without a systematic approach. That is why DQOps supports extensibility. You can customize the 150+ data quality checks or define your own. DQOps will show your custom checks in the user interface that is easy to use for the support teams and less-technical personnel leading to a true data literacy when every data stakeholder can run data quality checks without accidentally corrupting data pipelines.

- Regulatory compliance: In certain industries, such as healthcare and finance, measuring data quality with quantitative metrics and KPIs is necessary to meet regulatory requirements related to data accuracy and reporting. DQOps sets up a SaaS data quality data warehouse to store all data quality metrics. Our 50+ built-in data quality dashboards provide a complete view of the current data quality status. The data quality dashboards are also customizable, allowing you to create data domain-specific views that will be shared with business sponsors, external partners, and other stakeholders. DQOps is designed to measure data quality using data quality KPIs, which are reliable metrics that verify the truthfulness of data sources.

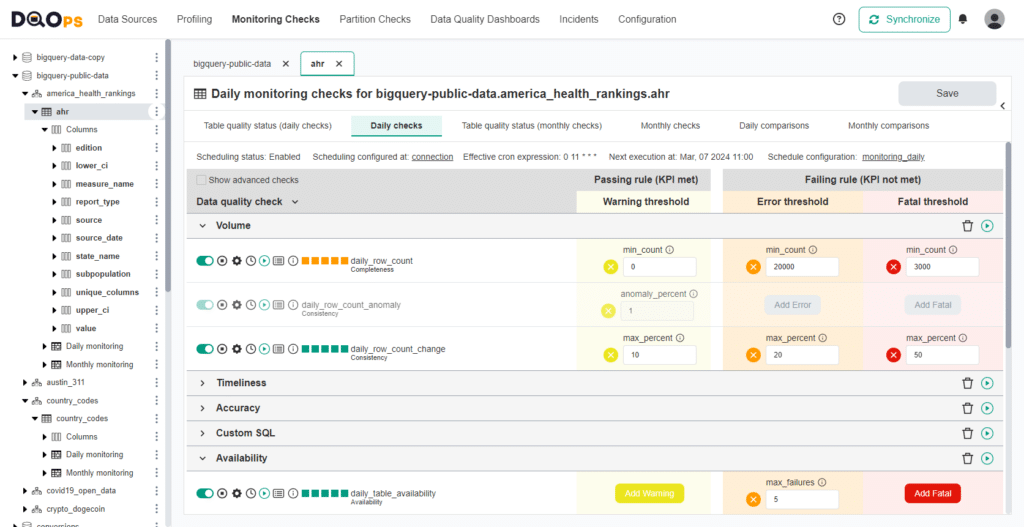

The following screenshot shows the data quality check editor of DQOps.

Please review the DQOps documentation for more examples. Our guides for detecting common data quality issues provide solutions for common errors.

The Future of Data Quality

Data quality is a journey, not a destination. Stay ahead of the curve by actively embracing continuous improvement, exploring the latest tools, and ensuring strong cross-team collaboration.

Please check out the DQOps documentation for details. You can install DQOps right now by downloading it from PyPI or starting a DQOps docker image.