DQOps platform overview

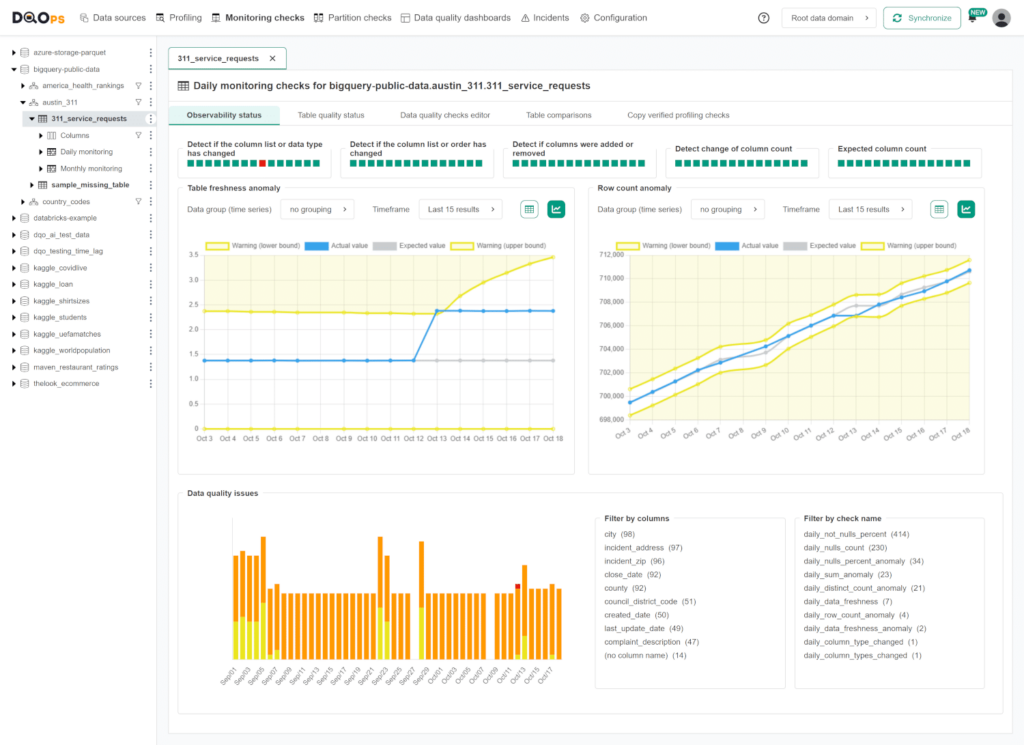

End-to-end data quality monitoring

Data engineers need a data quality platform that integrates with their data pipelines. As the data platform matures from development to production, non-coding operations teams and business users also start monitoring data quality, and they need a different interface.

DQOps is a data quality platform that covers the whole data platform lifecycle, from assessing new data sources to automating data quality monitoring.

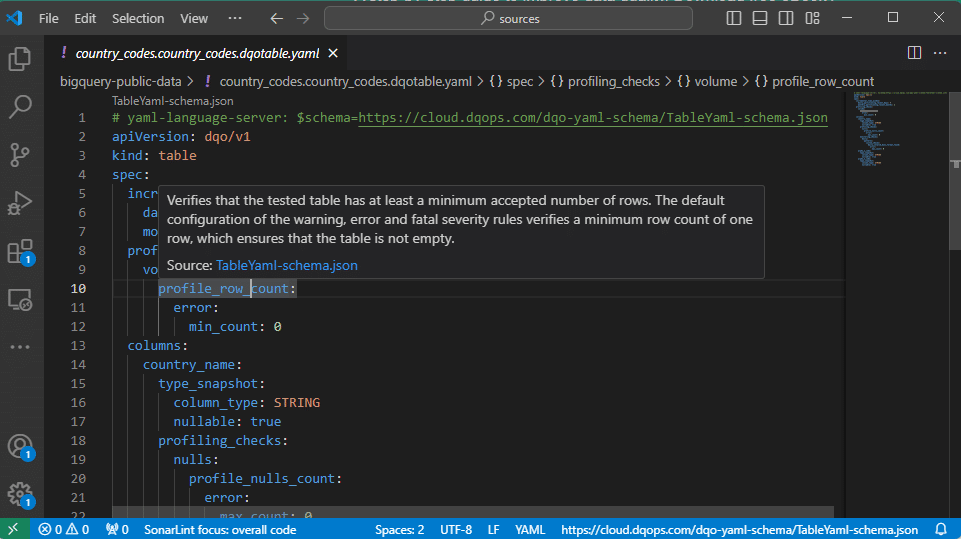

DQOps stores the configuration in YAML files and provides a user interface for non-coding users.

Multiple interfaces

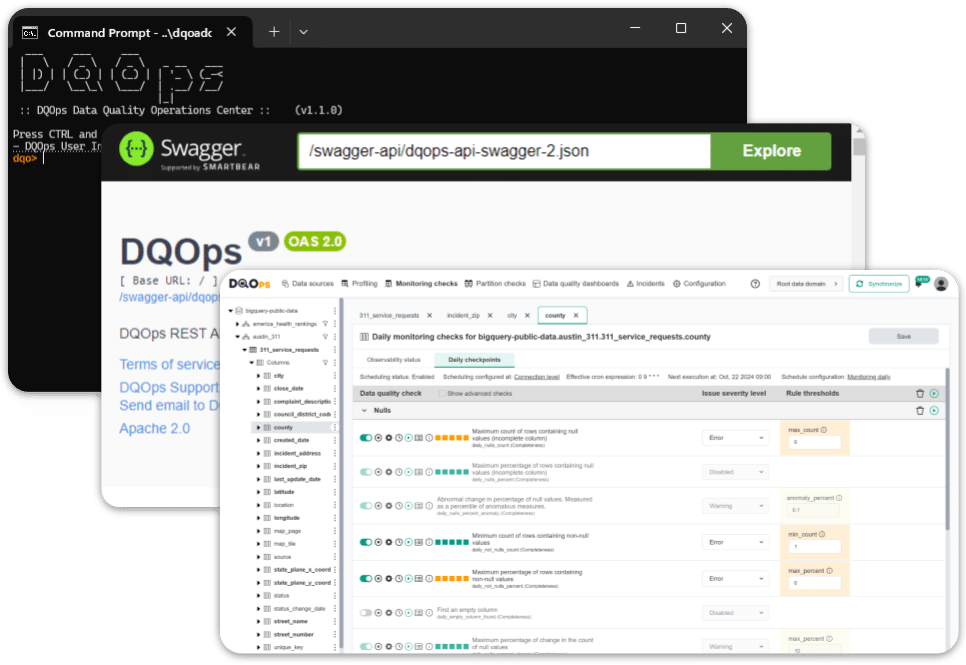

DQOps provides multiple interfaces for every type of user.

- Use the user interface locally to configure checks and review table statuses.

- Integrate data quality checks into data pipelines by calling the DQOps Python or REST client.

- Power users can configure data quality checks at scale using the command line.

Manage Data Quality

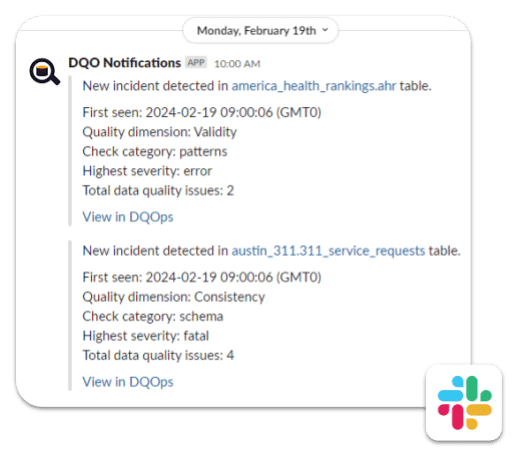

Progress smoothly from assessing new data sources to monitoring data quality and managing data quality incidents.

- Assess new data with 150+ built-in data quality checks.

- Activate data observability with policies.

- Manage data quality incident workflows and notifications.
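Activating checks by policy means that configuration is applied to every table matching a rule, not table by table. The minimal sketch below assumes a hypothetical policy format in which each policy pairs a table-name wildcard with a list of check names. The check names, `POLICIES`, and `checks_for_table` are illustrative only and are not DQOps's configuration schema or API.

```python
from fnmatch import fnmatchcase

# Hypothetical policies: each one pairs a table-name wildcard with check names.
# These names are illustrative only; they are not the DQOps policy schema.
POLICIES = [
    {"table_pattern": "sales.*", "checks": ["row_count", "nulls_percent"]},
    {"table_pattern": "*.fact_*", "checks": ["data_freshness", "row_count"]},
]


def checks_for_table(table_name: str, policies: list[dict]) -> list[str]:
    """Collect the checks activated by every matching policy, without duplicates."""
    activated: list[str] = []
    for policy in policies:
        # fnmatchcase applies shell-style wildcards with case-sensitive matching.
        if fnmatchcase(table_name, policy["table_pattern"]):
            for check in policy["checks"]:
                if check not in activated:
                    activated.append(check)
    return activated


print(checks_for_table("sales.fact_orders", POLICIES))
# ['row_count', 'nulls_percent', 'data_freshness']
```

A newly added table that matches an existing pattern picks up its checks without any per-table setup, which is the main benefit of policy-based activation.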

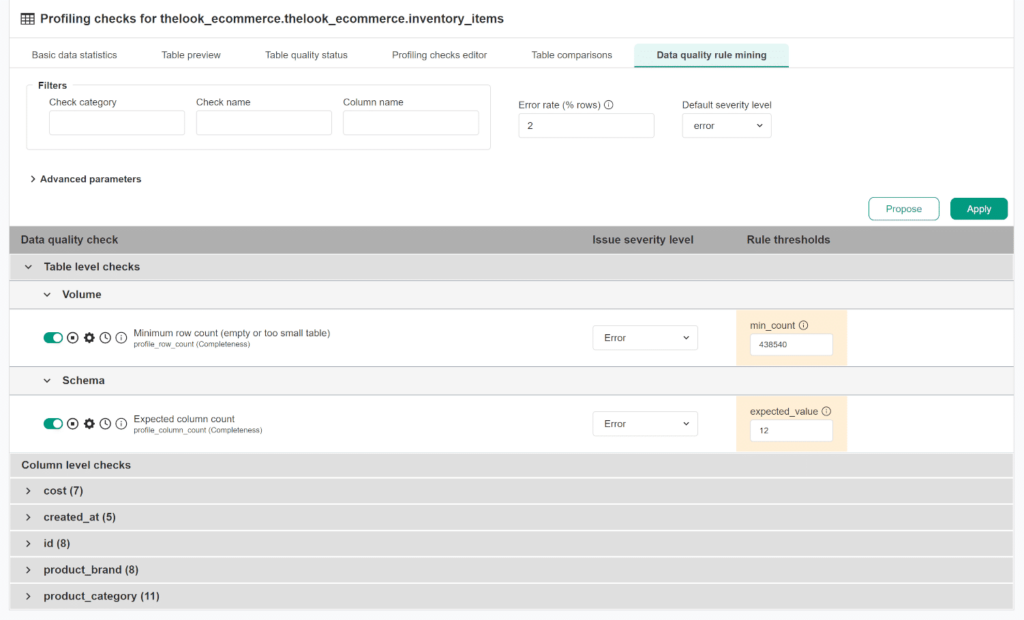

Automated rule mining

Save time and effort by letting DQOps set up data quality checks automatically with its rule mining engine.

- Configure checks to identify the most common data issues.

- Set up checks that pass on the current data, so that later deviations in future data are detected.

- Customize rule thresholds as needed.
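The idea of mining rules that pass on the current data can be illustrated with a minimal sketch. It assumes a single numeric column and proposes a maximum null percentage plus a value range widened by a configurable `margin`. `ProposedRules`, `propose_rules`, and `passes` are hypothetical names, and this is not the algorithm used by the DQOps rule mining engine.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProposedRules:
    """Thresholds mined from a column's current values (illustrative structure)."""
    max_nulls_percent: float
    min_value: float
    max_value: float


def nulls_percent(values: list[Optional[float]]) -> float:
    """Percentage of None entries in the column."""
    return 100.0 * sum(v is None for v in values) / len(values)


def propose_rules(values: list[Optional[float]], margin: float = 0.1) -> ProposedRules:
    """Propose thresholds the current values pass, widened by `margin` of the observed range."""
    non_null = [v for v in values if v is not None]
    if not non_null:
        raise ValueError("cannot mine a value range from an all-null column")
    low, high = min(non_null), max(non_null)
    slack = margin * (high - low)
    return ProposedRules(nulls_percent(values), low - slack, high + slack)


def passes(values: list[Optional[float]], rules: ProposedRules) -> bool:
    """Check a batch of values against the proposed rules."""
    non_null = [v for v in values if v is not None]
    return nulls_percent(values) <= rules.max_nulls_percent and all(
        rules.min_value <= v <= rules.max_value for v in non_null
    )


current = [10, 12, None, 15, 20]
rules = propose_rules(current)
print(rules)  # ProposedRules(max_nulls_percent=20.0, min_value=9.0, max_value=21.0)
print(passes(current, rules))  # True
```

Increasing `margin`, or editing the returned thresholds directly, corresponds to customizing the rule thresholds after mining.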

Connect a data source and automatically monitor the most common data quality issues

Schema changes

Monitor your data warehouse for any schema changes, such as missing columns, data type modifications, or column rearrangements.
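Schema change detection comes down to comparing two snapshots of a table's metadata. The minimal sketch below assumes each snapshot is a list of `(column_name, data_type)` pairs in column order. `diff_schema` is a hypothetical helper, not part of DQOps.

```python
def diff_schema(previous: list[tuple[str, str]], current: list[tuple[str, str]]) -> dict[str, object]:
    """Compare two (column_name, data_type) snapshots taken in column order."""
    prev_types = dict(previous)
    curr_types = dict(current)
    missing = [name for name in prev_types if name not in curr_types]
    added = [name for name in curr_types if name not in prev_types]
    type_changed = [
        name for name in prev_types
        if name in curr_types and prev_types[name] != curr_types[name]
    ]
    # Reordering is judged only on columns present in both snapshots,
    # so an added or dropped column alone does not count as a rearrangement.
    common_before = [name for name, _ in previous if name in curr_types]
    common_after = [name for name, _ in current if name in prev_types]
    return {
        "missing": missing,
        "added": added,
        "type_changed": type_changed,
        "reordered": common_before != common_after,
    }


yesterday = [("id", "INT"), ("email", "STRING"), ("phone", "STRING"), ("amount", "INT")]
today = [("id", "INT"), ("amount", "DECIMAL"), ("email", "STRING")]
print(diff_schema(yesterday, today))
# {'missing': ['phone'], 'added': [], 'type_changed': ['amount'], 'reordered': True}
```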

Completeness

Detect empty or too-small tables, and monitor for completeness issues caused by null values in a dataset.
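The two completeness signals mentioned here, a minimum row count and a maximum percentage of nulls, can be sketched over in-memory rows. This assumes rows are plain dictionaries and treats a missing key as a null. `completeness_issues` and its parameters are hypothetical and do not mirror DQOps check names.

```python
from typing import Any


def completeness_issues(
    rows: list[dict[str, Any]],
    column: str,
    min_row_count: int = 1,
    max_nulls_percent: float = 0.0,
) -> list[str]:
    """Report an empty or too-small table and a column whose null rate exceeds a threshold."""
    issues: list[str] = []
    if len(rows) < min_row_count:
        issues.append(f"row count {len(rows)} is below {min_row_count}")
    if rows:
        # A missing key is treated the same as an explicit NULL.
        nulls = sum(1 for row in rows if row.get(column) is None)
        percent = 100.0 * nulls / len(rows)
        if percent > max_nulls_percent:
            issues.append(f"{column}: {percent:.1f}% nulls exceeds {max_nulls_percent}%")
    return issues


rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": None},
    {"id": 3},
    {"id": 4, "email": "d@example.com"},
]
print(completeness_issues(rows, "email", min_row_count=10, max_nulls_percent=5.0))
# ['row count 4 is below 10', 'email: 50.0% nulls exceeds 5.0%']
```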

Anomalies

Detect unexpected values outside the regular range, identify new minimum and maximum values, and detect changes in typical values.
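One simple way to illustrate these anomaly signals is to compare today's batch with past daily batches. The sketch flags new extremes and uses a z-score of today's mean against past daily means as a stand-in for "a change in typical values". The z-score method, the threshold of 3.0, and `detect_anomalies` are assumptions chosen for illustration, not the detection method DQOps uses.

```python
from statistics import mean, stdev


def detect_anomalies(
    history: list[list[float]], today: list[float], z_threshold: float = 3.0
) -> list[str]:
    """Flag new extremes and a shift in the typical (mean) value versus past daily batches.

    `history` needs at least two past days because stdev() requires two data points.
    """
    findings: list[str] = []
    past_min = min(min(day) for day in history)
    past_max = max(max(day) for day in history)
    if min(today) < past_min:
        findings.append(f"new minimum {min(today)} (previous {past_min})")
    if max(today) > past_max:
        findings.append(f"new maximum {max(today)} (previous {past_max})")
    # Z-score of today's mean against the distribution of past daily means.
    daily_means = [mean(day) for day in history]
    spread = stdev(daily_means)
    if spread > 0:
        z = (mean(today) - mean(daily_means)) / spread
        if abs(z) > z_threshold:
            findings.append(f"typical value shifted (z-score {z:.1f})")
    return findings


history = [[10, 12, 14], [11, 13, 15], [9, 12, 15]]
print(detect_anomalies(history, [11, 12, 13]))  # []
print(detect_anomalies(history, [30, 35, 40]))
# ['new maximum 40 (previous 15)', 'typical value shifted (z-score 39.3)']
```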

Timeliness

Monitor table freshness (how old the most recent data is) and staleness (how long ago the data was last loaded).
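The difference between the two timeliness measures can be shown with a short sketch. It assumes the table exposes both a business event timestamp and a load timestamp for each row. `timeliness` and the example timestamps are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def timeliness(
    event_times: list[datetime], load_times: list[datetime], now: datetime
) -> tuple[timedelta, timedelta]:
    """Return (freshness, staleness) for one table.

    freshness: age of the newest business event in the table
    staleness: time elapsed since the newest row was loaded
    """
    freshness = now - max(event_times)
    staleness = now - max(load_times)
    return freshness, staleness


utc = timezone.utc
now = datetime(2024, 5, 10, 12, 0, tzinfo=utc)
events = [datetime(2024, 5, 9, 8, 0, tzinfo=utc), datetime(2024, 5, 9, 18, 0, tzinfo=utc)]
loads = [datetime(2024, 5, 10, 6, 0, tzinfo=utc)]
freshness, staleness = timeliness(events, loads, now)
print(freshness, staleness)  # 18:00:00 6:00:00
```

A timeliness check would then compare each value with a maximum age, for example raising an issue when `freshness > timedelta(days=1)`. In this example the data was loaded 6 hours ago, but its newest record is already 18 hours old.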

Code first

Proactively manage data quality without disrupting your existing workflows.

- Integrate data quality checks into data pipelines by calling DQOps.

- Run data quality checks from data pipelines using a Python client.

- Automate any operation visible in the user interface.
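After a pipeline step triggers a check run, it still has to decide whether to continue. The sketch below shows only that decision. It assumes a hypothetical `CheckResult` record and the severity labels "valid", "warning", "error", and "fatal". Fetching the results through the DQOps Python or REST client is deliberately left out because the exact client calls are not covered here.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    """Hypothetical shape of one check result returned to a pipeline."""
    table: str
    check_name: str
    severity: str  # assumed levels: "valid", "warning", "error", "fatal"


BLOCKING_SEVERITIES = {"error", "fatal"}


class DataQualityError(RuntimeError):
    """Raised to stop the pipeline before bad data is published."""


def gate(results: list[CheckResult]) -> list[CheckResult]:
    """Fail on blocking issues; return warnings so the pipeline can log them and continue."""
    blocking = [r for r in results if r.severity in BLOCKING_SEVERITIES]
    if blocking:
        failed = ", ".join(f"{r.table}.{r.check_name}" for r in blocking)
        raise DataQualityError(f"data quality checks failed: {failed}")
    return [r for r in results if r.severity == "warning"]


results = [
    CheckResult("sales.orders", "row_count", "valid"),
    CheckResult("sales.orders", "nulls_percent", "warning"),
]
warnings = gate(results)
print(len(warnings))  # 1
```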

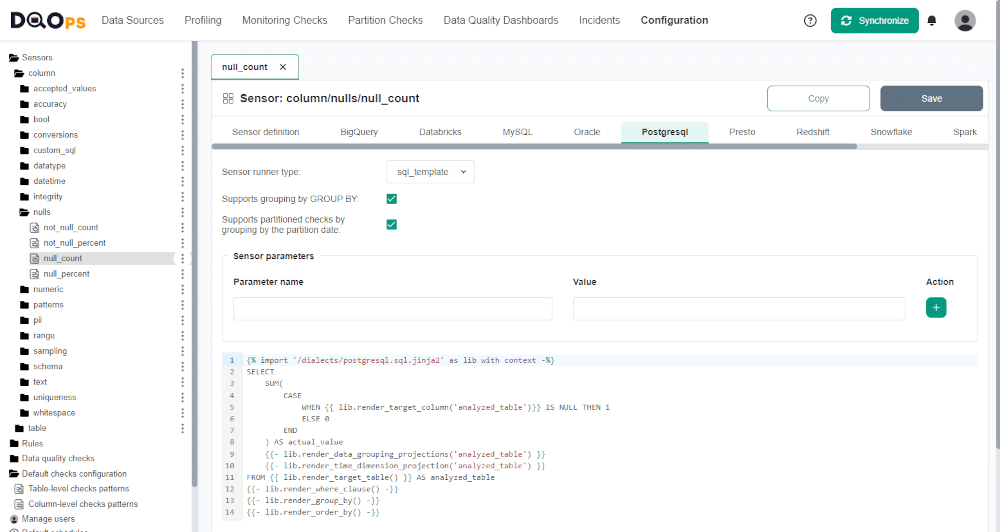

Custom checks

The DQOps platform is fully extensible. You can turn any SQL into a templated data quality check.

- Detect any data quality issue that business users care about.

- Apply machine learning to detect anomalies.

- Any custom checks you create will be visible in the user interface.
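Turning SQL into a templated check means the query is written once with placeholders and is then filled in per table. The sketch uses Python's standard `string.Template` as a stand-in for whatever templating DQOps actually uses, and runs against an in-memory SQLite table. The template text, the `actual_value` alias, and `run_custom_check` are illustrative assumptions.

```python
import sqlite3
from string import Template

# Hypothetical check template: counts rows that break a business rule.
# $table and $condition must come from trusted check configuration, not user input,
# because plain string substitution offers no protection against SQL injection.
INVALID_ROWS_SQL = Template(
    "SELECT COUNT(*) AS actual_value FROM $table WHERE NOT ($condition)"
)


def run_custom_check(
    conn: sqlite3.Connection, table: str, condition: str, max_invalid: int = 0
) -> tuple[int, bool]:
    """Render the template, run it, and compare the count of invalid rows with a threshold."""
    sql = INVALID_ROWS_SQL.substitute(table=table, condition=condition)
    # Rows where the condition evaluates to NULL are not counted, because NOT NULL is NULL.
    (actual_value,) = conn.execute(sql).fetchone()
    return actual_value, actual_value <= max_invalid


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10.0), (2, -5.0), (3, 7.5)])
print(run_custom_check(conn, "orders", "amount >= 0"))  # (1, False)
```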

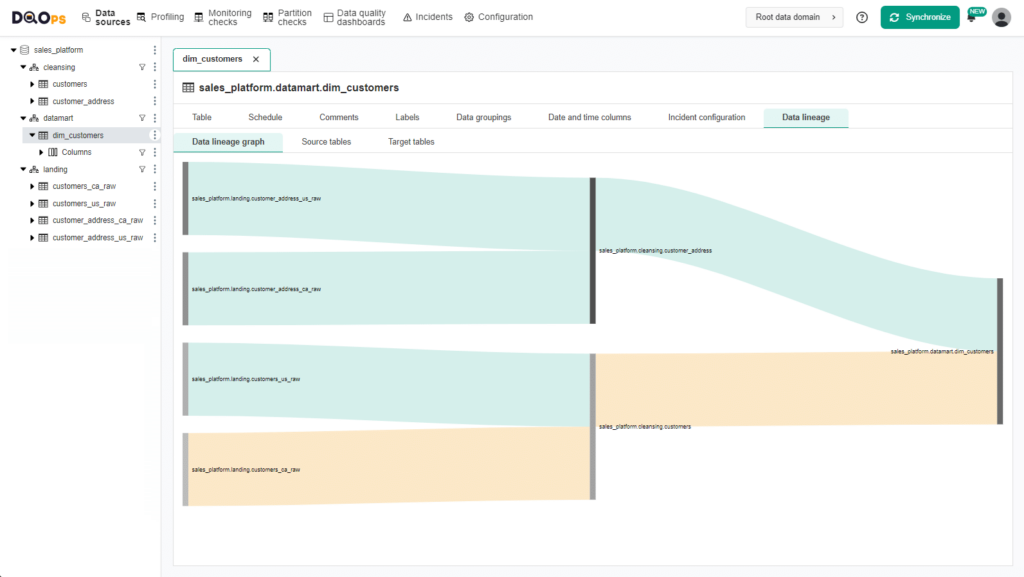

Data lineage

Quickly identify the root cause of data quality issues with DQOps’ data lineage capabilities.

- Easily identify the origins of quality issues.

- Load existing data lineage from Marquez without any manual setup.

- Automatically identify the most similar source and target tables for easy lineage configuration.
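The text does not say how DQOps scores table similarity. One simple way to illustrate the idea is Jaccard similarity of column names: candidate source tables that share more columns with a target table rank higher. The scoring method, `jaccard`, `most_similar_sources`, and the example tables are assumptions made for this sketch.

```python
def jaccard(a: set[str], b: set[str]) -> float:
    """Share of column names two tables have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def most_similar_sources(
    target_columns: list[str], candidates: dict[str, list[str]], top_n: int = 3
) -> list[tuple[str, float]]:
    """Rank candidate source tables by column-name overlap with the target table."""
    target = {c.lower() for c in target_columns}
    scored = [
        (name, jaccard(target, {c.lower() for c in columns}))
        for name, columns in candidates.items()
    ]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_n]


candidates = {
    "staging.customers": ["customer_id", "name", "email"],
    "staging.orders": ["order_id", "customer_id", "amount", "order_date", "load_ts"],
    "staging.payments": ["payment_id", "order_id", "amount"],
}
fact_orders_columns = ["order_id", "customer_id", "amount", "order_date"]
for name, score in most_similar_sources(fact_orders_columns, candidates):
    print(f"{name}: {score:.2f}")
# staging.orders: 0.80
# staging.payments: 0.40
# staging.customers: 0.17
```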
