Data quality monitoring for data ingestion

Ensure that your data sources are pulled correctly

Bad data ingested from external sources can have a cascading negative effect on your data pipelines and downstream analytics. These quality issues, ranging from missing values to formatting discrepancies, can lead to skewed results, flawed decision-making, and significant time wasted on data cleansing efforts.

The DQOps platform lets you monitor your ingestion tables and detect schema changes, format changes, and data delivery delays. This helps ensure that your data sources are being pulled correctly and consistently.

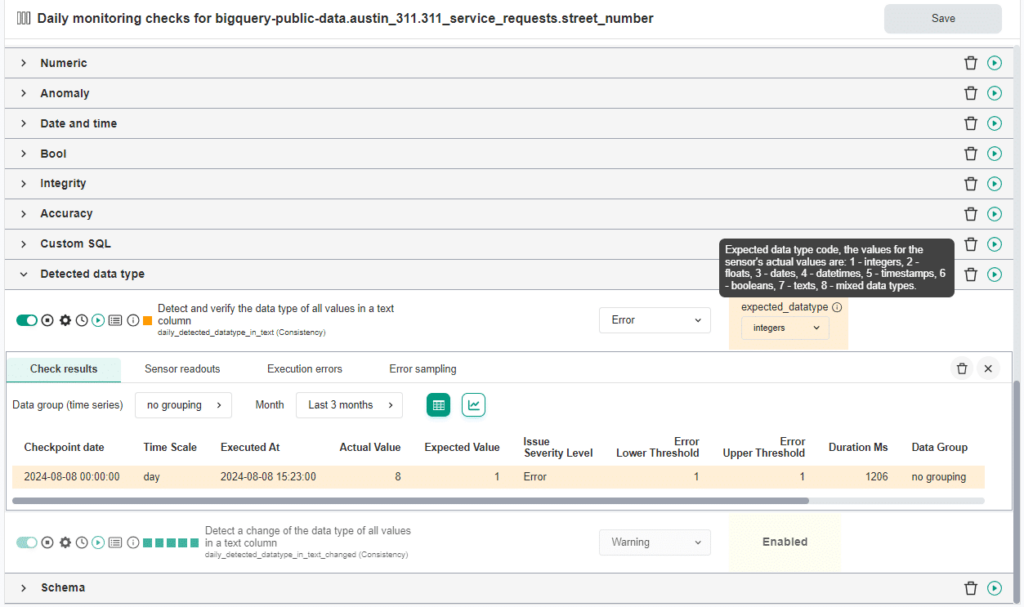

Data format and ranges

The DQOps platform automatically validates your source data for issues with data format, null values, value ranges, and uniqueness after it is loaded into your ingestion tables.

- Choose from a variety of built-in data format and range checks.

- Run data quality checks at the end of the data ingestion pipeline.

- Detect data format issues by identifying unexpected changes in the number of distinct column values.
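The kinds of metrics listed above can be illustrated with a small, generic Python sketch. It is not the DQOps API; the function name `profile_column`, the `DATE_PATTERN` rule, and the metric names are assumptions made for illustration only.

```python
import re
from collections import Counter

# Hypothetical format rule: ISO-style dates such as 2024-01-31.
DATE_PATTERN = re.compile(r"^\d{4}-\d{2}-\d{2}$")


def profile_column(values, pattern=None, min_value=None, max_value=None):
    """Return simple quality metrics for one column of ingested values."""
    non_null = [v for v in values if v is not None]
    counts = Counter(non_null)
    metrics = {
        "null_count": len(values) - len(non_null),
        "distinct_count": len(counts),
        # Every repeat of an already seen value counts as one duplicate.
        "duplicate_count": sum(c - 1 for c in counts.values()),
    }
    if pattern is not None:
        metrics["invalid_format_count"] = sum(
            1 for v in non_null if not pattern.fullmatch(str(v))
        )
    if min_value is not None and max_value is not None:
        metrics["out_of_range_count"] = sum(
            1 for v in non_null if not (min_value <= v <= max_value)
        )
    return metrics


dates = ["2024-01-31", "2024-02-01", None, "31/01/2024", "2024-01-31"]
print(profile_column(dates, pattern=DATE_PATTERN))
```

Comparing `distinct_count` between two loads of the same column is one simple way to notice an unexpected change in the number of distinct values.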

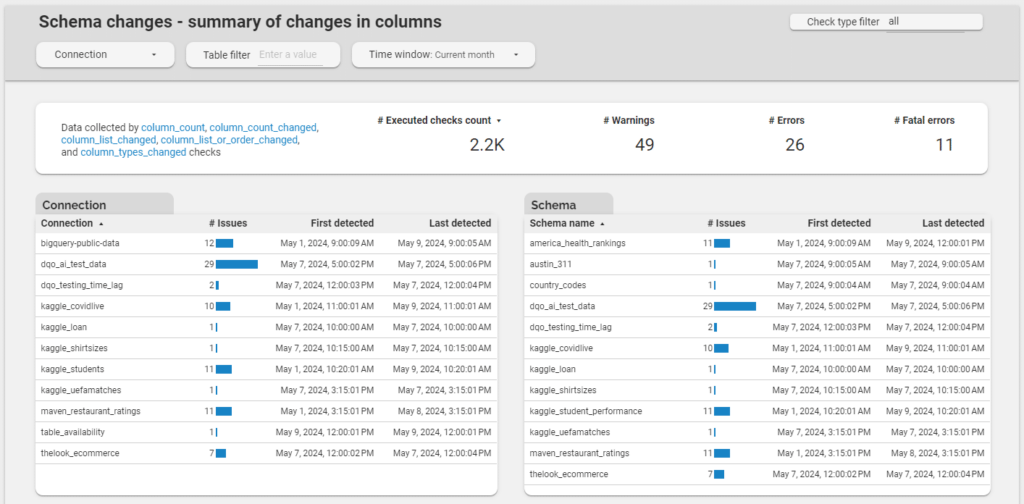

Schema change detection

DQOps automatically detects data quality issues caused by all types of schema changes.

- Monitor tables for changes such as missing columns, data type changes, or reordered columns.

- Detect when the column order changes in CSV or Parquet files.

- Review recent schema changes on built-in dashboards.
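To make the categories of schema change concrete, here is a minimal Python sketch that compares an expected schema with the one actually observed. It does not use DQOps itself; `detect_schema_changes` and the message strings are hypothetical, and a schema is assumed to be an ordered list of `(column_name, data_type)` pairs.

```python
def detect_schema_changes(expected, actual):
    """Compare two ordered lists of (column_name, data_type) pairs."""
    expected_types = dict(expected)
    actual_types = dict(actual)
    changes = []

    for name, data_type in expected_types.items():
        if name not in actual_types:
            changes.append(f"missing column: {name}")
        elif actual_types[name] != data_type:
            changes.append(
                f"type changed: {name} {data_type} -> {actual_types[name]}"
            )

    for name in actual_types:
        if name not in expected_types:
            changes.append(f"new column: {name}")

    # Column order is compared only for the columns both schemas share.
    shared_expected = [n for n, _ in expected if n in actual_types]
    shared_actual = [n for n, _ in actual if n in expected_types]
    if shared_expected != shared_actual:
        changes.append("column order changed")

    return changes


expected = [("id", "INT"), ("name", "STRING"), ("created_at", "TIMESTAMP")]
actual = [("name", "STRING"), ("id", "STRING"), ("signup_source", "STRING")]
for change in detect_schema_changes(expected, actual):
    print(change)
```

Order is checked separately from presence because a reordered CSV file can load without errors while silently putting values into the wrong columns.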

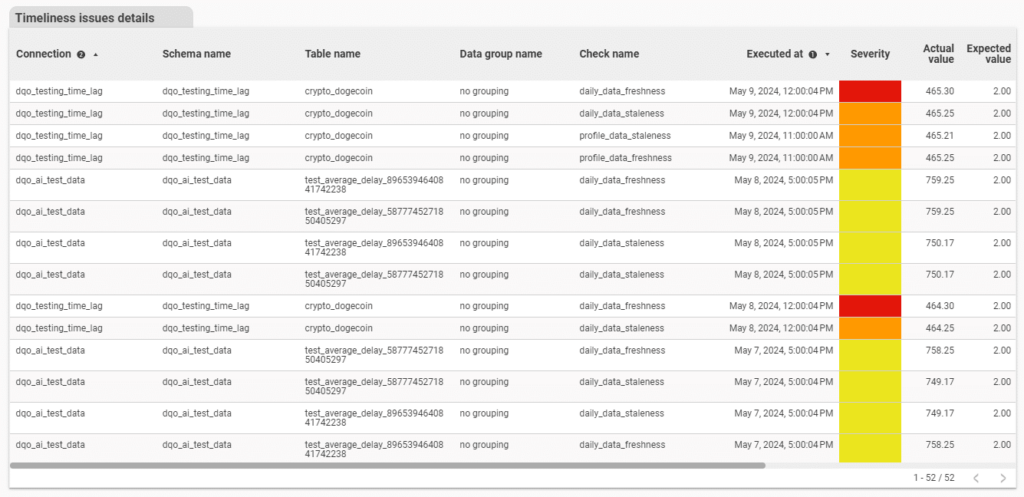

Data delays and stale tables

DQOps includes multiple built-in checks to ensure data timeliness. You can measure data staleness, which is the time since the last refresh of the data warehouse, or monitor ingestion delay, which refers to the delay between each pipeline run.

- Identify tables that have not been refreshed recently.

- Quickly configure timeliness checks with a user-friendly interface.

- Track and review all types of timeliness issues from multiple angles with built-in dashboards.
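The two timeliness measures described above can be sketched in plain Python. This is an illustration under stated assumptions, not how DQOps computes them: the function names, the `max_age` threshold, and the use of explicit timestamps as input are all hypothetical.

```python
from datetime import datetime, timedelta, timezone


def staleness(last_refresh, now):
    """Time elapsed since the table was last refreshed."""
    return now - last_refresh


def is_stale(last_refresh, now, max_age):
    """True when the table is older than the allowed maximum age."""
    return staleness(last_refresh, now) > max_age


def run_gaps(run_times):
    """Delays between consecutive pipeline runs, oldest first."""
    ordered = sorted(run_times)
    return [later - earlier for earlier, later in zip(ordered, ordered[1:])]


now = datetime(2024, 1, 10, 12, 0, tzinfo=timezone.utc)
last_refresh = datetime(2024, 1, 8, 12, 0, tzinfo=timezone.utc)
print(staleness(last_refresh, now))
print(is_stale(last_refresh, now, max_age=timedelta(days=1)))
```

Passing `now` in as an argument, rather than reading the clock inside the function, keeps the check repeatable when it is rerun for a past date.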

Ground truth checks

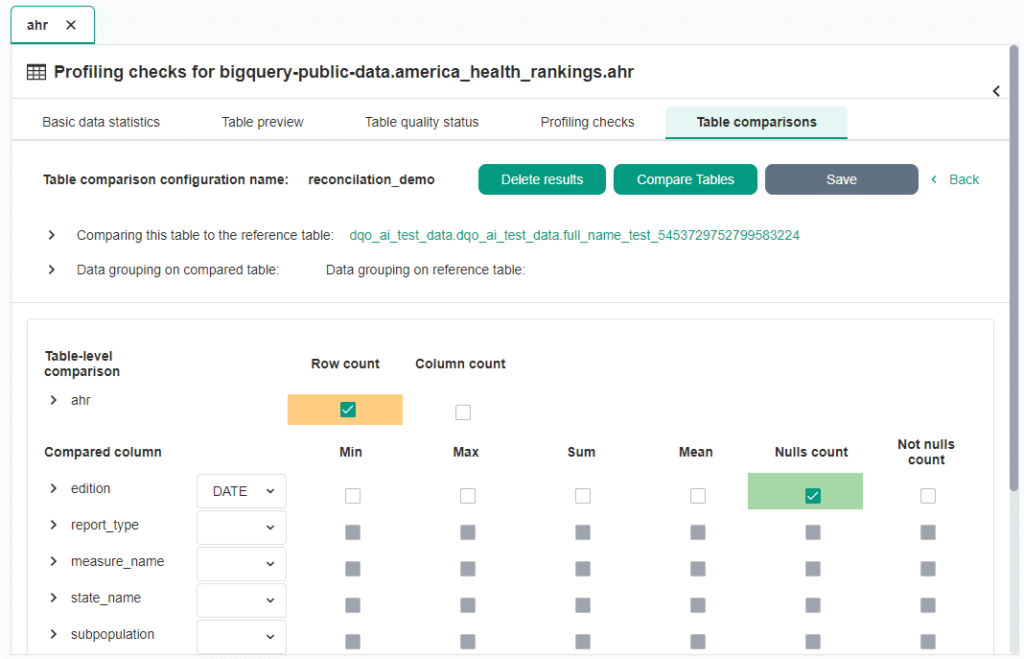

The DQOps platform can run accuracy checks that compare a table with reference data. You configure the table comparison in a user-friendly interface. You can also compare the data with flat files that you load into a database or directly into DQOps.

- Compare the data with real-world reference data.

- Verify the expected row and column count for data integrity between tables.

- Compare sums, minimums, maximums, averages, and null values between corresponding columns in different tables.
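The comparison described in the list above can be illustrated with a short Python sketch that computes the same aggregates on a tested table and a reference table and reports the ones that differ. The names `column_stats` and `compare_tables` are hypothetical, and tables are assumed to be lists of dictionaries rather than real database tables.

```python
def column_stats(rows, column):
    """Aggregate one numeric column of a table given as a list of dicts."""
    values = [row[column] for row in rows]
    non_null = [v for v in values if v is not None]
    return {
        "row_count": len(rows),
        "null_count": len(values) - len(non_null),
        "sum": sum(non_null),
        "min": min(non_null) if non_null else None,
        "max": max(non_null) if non_null else None,
        "avg": sum(non_null) / len(non_null) if non_null else None,
    }


def compare_tables(tested, reference, column):
    """Return {metric: (tested_value, reference_value)} for each mismatch."""
    tested_stats = column_stats(tested, column)
    reference_stats = column_stats(reference, column)
    # Exact equality is used here; a tolerance may suit floating-point data.
    return {
        metric: (tested_stats[metric], reference_stats[metric])
        for metric in tested_stats
        if tested_stats[metric] != reference_stats[metric]
    }


reference = [{"amount": 10}, {"amount": 20}, {"amount": 30}]
tested = [{"amount": 10}, {"amount": 20}, {"amount": None}]
print(compare_tables(tested, reference, "amount"))
```

An empty result means every compared aggregate matched, which is the outcome expected when the ingested table agrees with the reference data.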