Data quality monitoring for data governance

Monitor data quality through unified data quality metrics

Inconsistent data quality processes make governance rules difficult to enforce. Together with the bad data they let through, they create confusion, lead to unreliable insights, waste resources, and undermine trust in the information used for decision-making.

DQOps is a centralized platform for data quality monitoring that also works in distributed environments. Observe data quality across public and private clouds so that all your databases adhere to the same standards.

Streamlined data quality practices

Simplified adoption

Effortlessly integrate data quality monitoring into your data pipelines, encouraging adoption among data teams.

Unified metrics

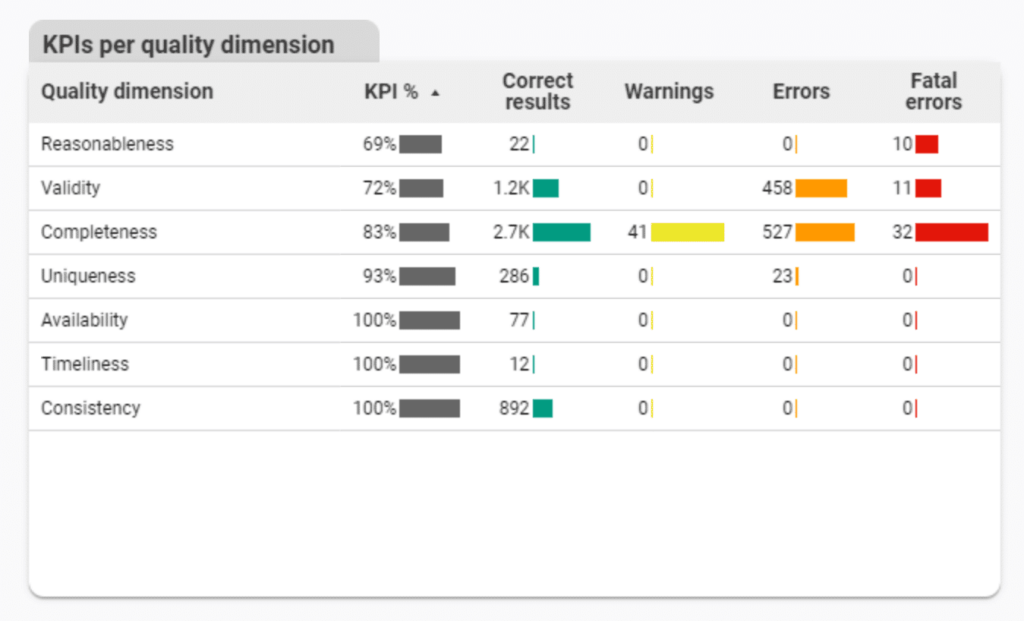

Monitor the same dimensions and compare the same data quality KPIs to promote consistent data quality practices across all data teams.

Database cross checks

Maintain data integrity across databases by comparing tables for potential discrepancies and missing values.
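The idea behind a cross check can be sketched in a few lines of Python. This is only an illustration that uses two in-memory SQLite databases, not how DQOps implements table comparison; the `compare_tables` helper, the `customers` table, and its columns are hypothetical names chosen for the example.

```python
import sqlite3

def compare_tables(source_conn, target_conn, table, key):
    """Return (keys missing from the target, keys whose rows differ).

    Hypothetical helper: table and key names are interpolated directly,
    so they must come from trusted configuration, never from user input.
    """
    query = f"SELECT * FROM {table} ORDER BY {key}"

    def load(conn):
        cursor = conn.execute(query)
        columns = [description[0] for description in cursor.description]
        key_index = columns.index(key)
        return {row[key_index]: row for row in cursor}

    source, target = load(source_conn), load(target_conn)
    missing = sorted(k for k in source if k not in target)
    mismatched = sorted(k for k in source if k in target and source[k] != target[k])
    return missing, mismatched

def make_db(rows):
    # Build a throwaway in-memory database standing in for a real one.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", rows)
    return conn

source_db = make_db([(1, "a@example.com"), (2, "b@example.com"), (3, "c@example.com")])
target_db = make_db([(1, "a@example.com"), (2, None)])  # row 3 absent, email 2 missing

missing_keys, mismatched_keys = compare_tables(source_db, target_db, "customers", "id")
print("missing in target:", missing_keys)
print("rows that differ:", mismatched_keys)
```

Comparing full rows by key is practical only for small tables; for large ones, comparing row counts and per-group aggregates is the more usual approach.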

Unified data quality monitoring

The DQOps platform supports monitoring of popular data quality dimensions such as validity, availability, reliability, timeliness, uniqueness, reasonableness, completeness, and accuracy across all major databases.

- Create a set of checks that will be automatically run on all your databases.

- Keep track of data quality KPIs with data quality dashboards.

- Analyze data quality across the enterprise.
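As a rough illustration of a unified metric, the sketch below computes a KPI per data quality dimension as the percentage of passed checks among all executed checks. That formula is an assumption made for this example, and `kpi_by_dimension` and the sample results are hypothetical.

```python
from collections import defaultdict

def kpi_by_dimension(results):
    """Compute the percentage of passed checks for each dimension.

    `results` is an iterable of (dimension, passed) pairs.
    """
    totals = defaultdict(lambda: [0, 0])  # dimension -> [passed, executed]
    for dimension, passed in results:
        totals[dimension][1] += 1
        if passed:
            totals[dimension][0] += 1
    return {dim: 100.0 * p / n for dim, (p, n) in totals.items()}

# Hypothetical check results collected from several databases.
check_results = [
    ("completeness", True), ("completeness", True),
    ("completeness", False), ("completeness", True),
    ("validity", True), ("validity", False),
    ("timeliness", True),
]

kpis = kpi_by_dimension(check_results)
for dimension, kpi in sorted(kpis.items()):
    print(f"{dimension}: {kpi:.1f}%")
```

Because every team's results go through the same calculation, the resulting numbers can be compared side by side on a dashboard.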

Standardized data quality checks

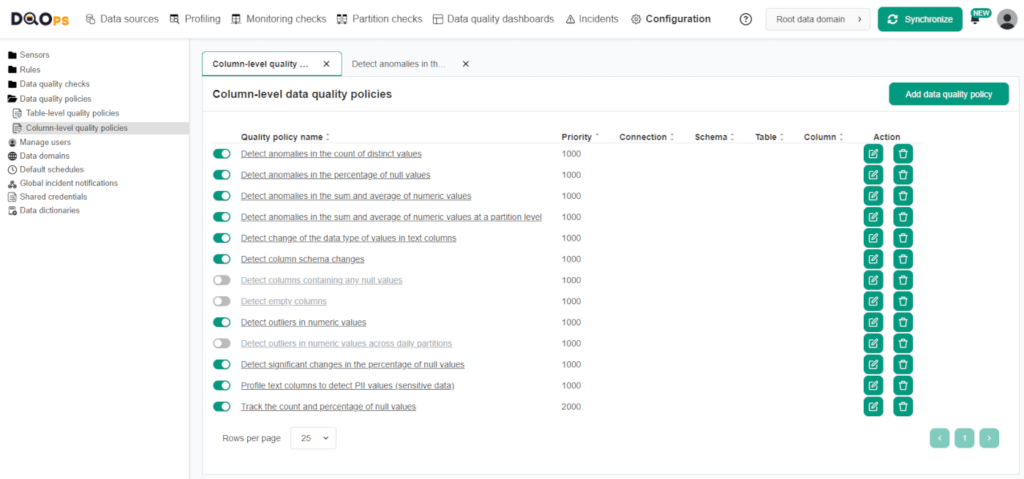

Consistent checks ensure consistent measurement and simplify enforcement of data governance rules. Create a data quality policy with a standard set of data quality checks for all databases. Modify built-in checks or create custom checks for specific needs.

- Use the same checks to verify data quality in all databases and data lakes.

- Customize data quality checks to suit your specific requirements.

- Define custom quality checks using templated SQL queries (Jinja2 compatible), Python code, or Java classes for advanced scenarios.
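To give a feel for a check defined as a templated SQL query, here is a minimal sketch. It renders the query with Python's standard-library `string.Template` as a dependency-free stand-in for Jinja2 and runs it against an in-memory SQLite database. The template, the `run_null_percent_check` function, and the `max_percent` parameter are hypothetical and do not reproduce the DQOps template format.

```python
import sqlite3
from string import Template

# Hypothetical check template: percentage of NULL values in a column.
NULL_PERCENT_SQL = Template(
    "SELECT 100.0 * SUM(CASE WHEN $column IS NULL THEN 1 ELSE 0 END) / COUNT(*) "
    "FROM $table"
)

def run_null_percent_check(conn, table, column, max_percent):
    """Render the template, run it, and compare the result to a threshold.

    Table and column names are substituted as-is, so they must come from
    trusted configuration, never from user input.
    """
    sql = NULL_PERCENT_SQL.substitute(table=table, column=column)
    (actual,) = conn.execute(sql).fetchone()
    return actual, actual <= max_percent

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, customer_id INTEGER)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10), (2, None), (3, 11), (4, 12)])

actual_percent, passed = run_null_percent_check(conn, "orders", "customer_id", max_percent=10.0)
print(f"null percent: {actual_percent:.1f}, passed: {passed}")
```

The same template can be rendered for any table and column, which is what lets one check definition be reused across databases.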

Data quality documentation

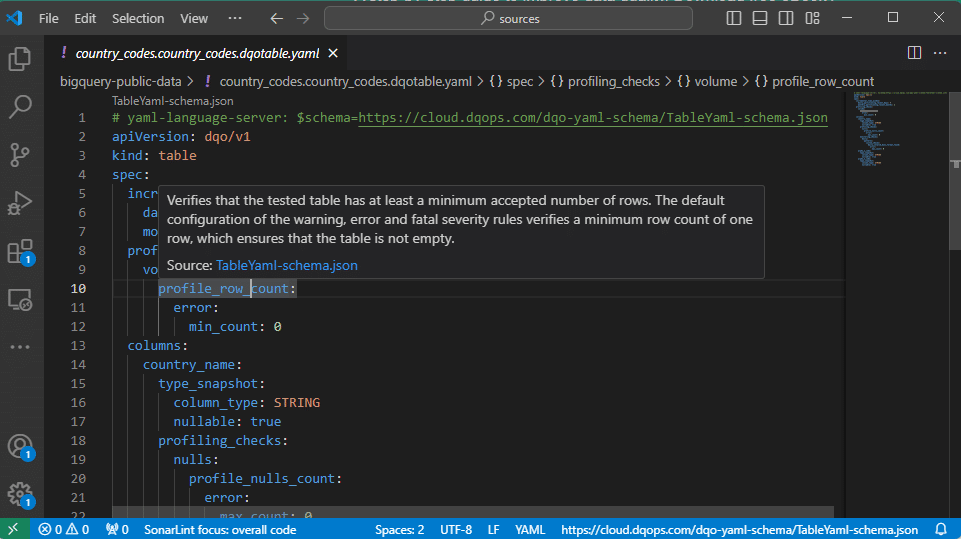

In the DQOps platform, data quality check specifications are defined in YAML files. The specifications are easy to read and clearly show the types of checks and their expected thresholds for different alert severity levels.

- Use data quality specification files as data quality documentation.

- Store data quality specification files in a code repository along with your data science and data pipeline code.

- Track changes in data quality requirements by comparing specification files in Git.
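The sketch below shows how thresholds per severity level can be read and applied. It models a specification as a nested Python dictionary, the shape a parsed YAML file would have. The check name `nulls_percent`, the `max_percent` parameter, and the severity names used here are assumptions for illustration rather than a copy of the DQOps file schema.

```python
# Most severe level first, so the strongest breached threshold wins.
SEVERITY_ORDER = ["fatal", "error", "warning"]

# Hypothetical specification for one check, as it might look after parsing YAML.
spec = {
    "nulls_percent": {
        "warning": {"max_percent": 1.0},
        "error": {"max_percent": 5.0},
        "fatal": {"max_percent": 20.0},
    }
}

def evaluate(actual, thresholds):
    """Return the most severe level whose threshold `actual` exceeds."""
    for severity in SEVERITY_ORDER:
        limit = thresholds.get(severity)
        if limit is not None and actual > limit["max_percent"]:
            return severity
    return "passed"

for measured in (0.5, 3.0, 7.5, 25.0):
    print(measured, "->", evaluate(measured, spec["nulls_percent"]))
```

Because the thresholds live in a plain data structure, a change such as tightening the `error` limit shows up as a one-line diff in version control.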
