Data quality monitoring for data engineers

Prevent loading invalid data through your data pipelines

Managing data quality manually across various cloud environments and outside of data pipelines is inefficient and can lead to errors in downstream analysis. Proper integration with version control systems is necessary to track changes and manage versions of data quality code.

The DQOps platform supports distributed agents for seamless monitoring. Integrate DQOps directly into your data pipelines to automatically halt processing when critical source data exhibits severe quality issues. All data quality configurations are stored in human-readable YAML files, making modification and version control easy with Git.

Ensure data integrity throughout your pipelines

Source data checks

Identify data quality problems in source data before loading it into your pipelines, saving time and effort.

Over 150 built-in data quality checks in DQOps detect the most common data quality issues.

Pipeline data checks

Guarantee successful data processing by detecting and addressing issues within your pipelines.

Run data quality checks to detect missing or incomplete data and validate successful data replication or migration with table comparison.
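As a rough illustration of what a table comparison verifies after replication or migration, the sketch below compares the row count and the sum of one numeric column between a source and a target table. It uses an in-memory SQLite database, and the table names, the column name, and the `compare_tables` helper are hypothetical; it is not the DQOps implementation.

```python
import sqlite3


def compare_tables(conn, source, target, numeric_column):
    """Return the measures that differ between two tables as {measure: (source, target)}."""

    def profile(table):
        # Identifiers are interpolated directly, so they must come from
        # trusted configuration and never from user input.
        row = conn.execute(
            f"SELECT COUNT(*), COALESCE(SUM({numeric_column}), 0) FROM {table}"
        ).fetchone()
        return {"row_count": row[0], "column_sum": row[1]}

    src, tgt = profile(source), profile(target)
    return {name: (src[name], tgt[name]) for name in src if src[name] != tgt[name]}


# Hypothetical example data: the target is missing one replicated row.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE orders_source (id INTEGER, amount INTEGER);
    CREATE TABLE orders_target (id INTEGER, amount INTEGER);
    INSERT INTO orders_source VALUES (1, 10), (2, 20), (3, 30);
    INSERT INTO orders_target VALUES (1, 10), (2, 20);
    """
)

mismatches = compare_tables(conn, "orders_source", "orders_target", "amount")
print(mismatches)
```

An empty result means both measures match; any entry points to a row count or aggregate that diverged during replication.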

Customizable checks

Tailor built-in data quality checks to your specific needs.

Develop your own data quality checks using templated Jinja2 SQL queries and Python rules.
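To make the split between a templated query and a rule more concrete, here is a minimal sketch, assuming a check that measures the percentage of non-null values and a rule that compares it with a threshold. The template text, the `RuleResult` class, and the `min_percent_rule` function are hypothetical and do not reproduce the DQOps rule interface.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical Jinja2-style template; a templating engine would substitute
# the placeholders before the query is sent to the data source.
NOT_NULL_PERCENT_SQL = """
SELECT 100.0 * COUNT({{ column_name }}) / NULLIF(COUNT(*), 0) AS actual_value
FROM {{ table_name }}
"""


@dataclass
class RuleResult:
    passed: bool
    expected_value: Optional[float]


def min_percent_rule(actual_value: Optional[float], min_percent: float) -> RuleResult:
    """Pass when the measured percentage is at least min_percent."""
    if actual_value is None:
        # No measurement (for example, an empty table), so there is nothing to judge.
        return RuleResult(passed=True, expected_value=None)
    return RuleResult(passed=actual_value >= min_percent, expected_value=min_percent)


result_ok = min_percent_rule(99.5, min_percent=99.0)
result_bad = min_percent_rule(92.0, min_percent=99.0)
print(result_ok.passed, result_bad.passed)
```

Keeping the query (what is measured) separate from the rule (what is acceptable) lets the same measurement be reused with different thresholds.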

Effortless integration

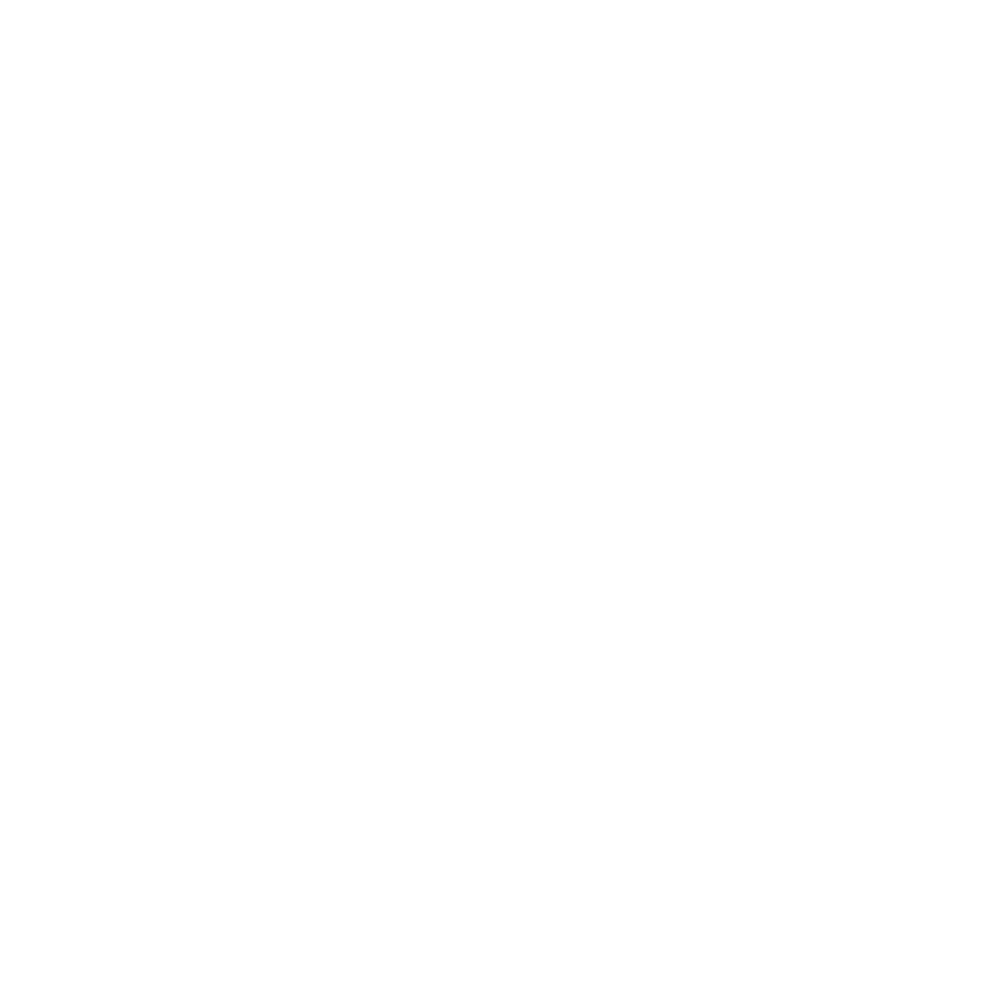

The DQOps platform stores all data quality configurations in human-readable YAML files. Using the REST API Python client, you can run data quality checks from data pipelines and integrate data quality into Apache Airflow.

- Store data quality configuration in Git.

- Edit data quality configuration in popular code editors.

- Automate any operation visible in the user interface with a Python client.

Data lineage protection

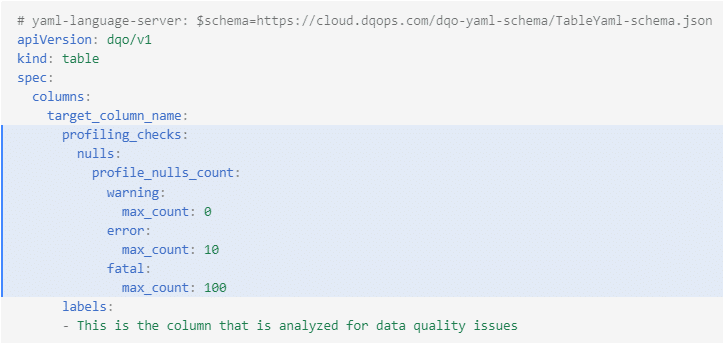

Monitor data quality throughout the entire data journey. Use built-in dashboards for quick issue review and root cause identification.

- Ensure each step of data ingestion is functioning properly.

- Identify the root cause of the issue.

- Fix problems with data sources.

Granular pipeline control

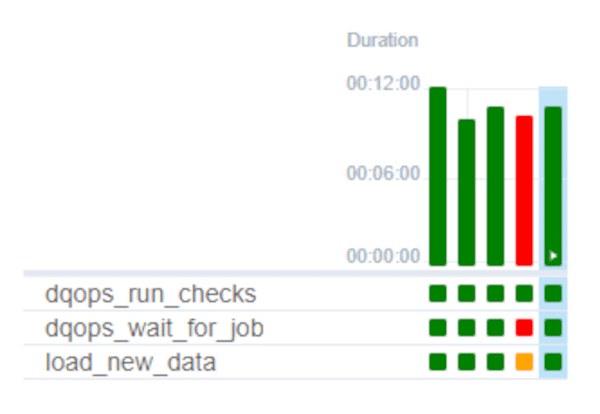

Integrate DQOps easily with scheduling platforms to halt data loading when severe quality issues arise. Once issues are resolved, resume processing seamlessly.

- Monitor data quality directly in the data pipelines.

- Integrate DQOps with Apache Airflow or dbt.

- Prevent bad data from entering your pipelines.
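The halting behavior described above can be sketched as a small gate that a pipeline step calls before loading data. This is a minimal illustration under stated assumptions: the severity names and their order, the `DataQualityGateError` exception, and the `enforce_quality_gate` and `load_step` functions are hypothetical, and the severity value would in practice come from the result of a data quality check run.

```python
# Assumed severity scale, ordered from least to most severe.
SEVERITY_ORDER = {"valid": 0, "warning": 1, "error": 2, "fatal": 3}


class DataQualityGateError(RuntimeError):
    """Raised to stop a pipeline step when data quality is too poor to continue."""


def enforce_quality_gate(highest_severity: str, halt_at: str = "fatal") -> None:
    """Raise if the highest detected severity reaches the halt_at level."""
    for name in (highest_severity, halt_at):
        if name not in SEVERITY_ORDER:
            raise ValueError(f"Unknown severity: {name!r}")
    if SEVERITY_ORDER[highest_severity] >= SEVERITY_ORDER[halt_at]:
        raise DataQualityGateError(
            f"Halting load: severity {highest_severity!r} reached threshold {halt_at!r}"
        )


def load_step(highest_severity: str) -> str:
    """Hypothetical pipeline step that loads data only when the gate passes."""
    enforce_quality_gate(highest_severity)
    return "loaded"


print(load_step("warning"))
try:
    load_step("fatal")
except DataQualityGateError as exc:
    print(exc)
```

In a scheduler, raising an exception from the task typically marks it as failed and blocks downstream tasks; rerunning the task after the source data is fixed resumes processing.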
